Shibani Santurkar

@ShibaniSan

@OpenAI

ID:2789364932

https://shibanisanturkar.com/

Joined 04-09-2014 07:59:16

146 Tweets

2.9K Followers

184 Following

Dear Embassy team, I am an Indian citizen studying in San Diego. I misplaced my passport while travelling from the US to Greece via Canada. I am in contact with the consulate in Vancouver but am desperate for help.


Very cool work by Tatsunori Hashimoto and colleagues: ask LLMs questions from Pew surveys to measure whose opinions the model's outputs most closely reflect.

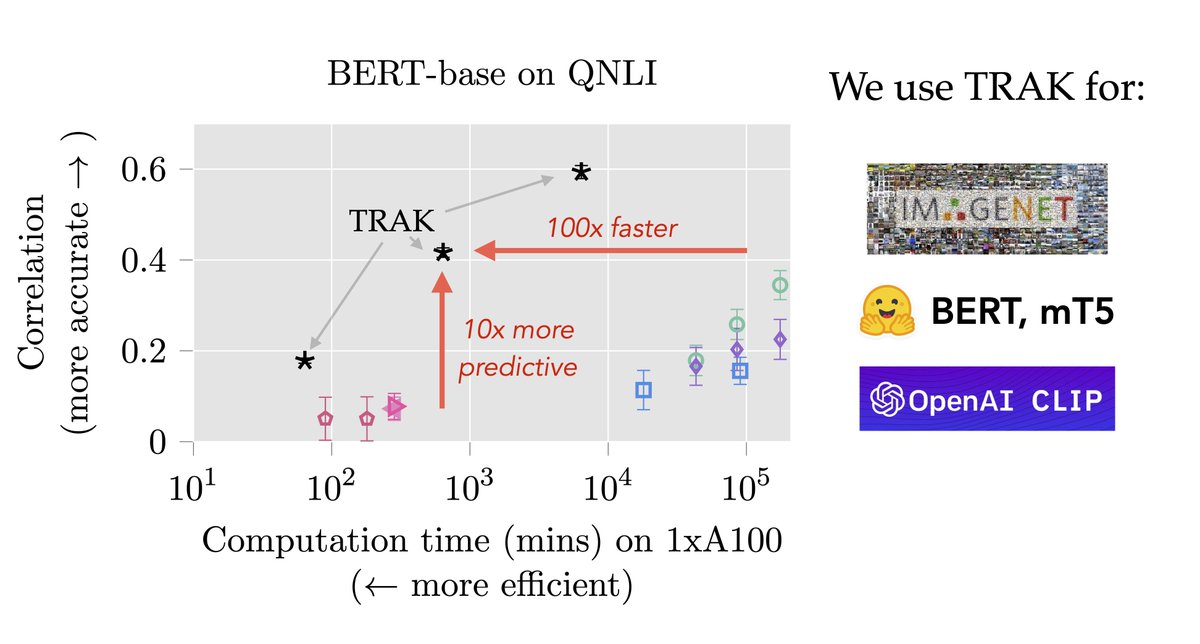
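The tweet doesn't say how "most closely reflect" is measured. One plausible choice for survey questions with ordered answer options ("Strongly disagree" through "Strongly agree") is the 1-Wasserstein distance between the model's answer distribution and each group's. The sketch below assumes that metric, assumes the model's distribution is already available as probabilities over the options, and uses hypothetical function names, group names, and numbers. It is not presented as the authors' exact method.

```python
# Minimal sketch (assumption): compare answer distributions over ordered options
# with the 1-Wasserstein distance; all names and numbers here are hypothetical.

def wasserstein_1d_ordinal(p, q):
    """1-Wasserstein distance between two distributions on ordered options 0..N-1
    with unit spacing: the sum of |CDF_p - CDF_q|. Inputs are normalized first."""
    if len(p) != len(q):
        raise ValueError("distributions must have the same number of options")
    total_p, total_q = sum(p), sum(q)
    cdf_p = cdf_q = dist = 0.0
    # The final CDF value is 1 for both distributions, so it contributes nothing.
    for a, b in zip(p[:-1], q[:-1]):
        cdf_p += a / total_p
        cdf_q += b / total_q
        dist += abs(cdf_p - cdf_q)
    return dist

def alignment(p_model, p_group):
    """Rescale the distance to [0, 1]; the largest possible W1 on N options is N - 1."""
    n = len(p_model)
    return 1.0 - wasserstein_1d_ordinal(p_model, p_group) / (n - 1)

def closest_group(p_model, groups):
    """Return the name of the group whose answer distribution best matches the model's."""
    return max(groups, key=lambda name: alignment(p_model, groups[name]))

# Hypothetical usage with made-up distributions over four ordered options.
model = [0.1, 0.2, 0.3, 0.4]
groups = {"group_a": [0.4, 0.3, 0.2, 0.1], "group_b": [0.1, 0.25, 0.25, 0.4]}
print(closest_group(model, groups))  # group_b
```

Working with CDFs rather than comparing options one by one means that putting mass on "Agree" instead of "Strongly agree" costs less than putting it on "Strongly disagree".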

As ML models/datasets get bigger + more opaque, we need a *scalable* way to ask: where in the *data* did a prediction come from?

Presenting TRAK: data attribution with (significantly) better speed/efficacy tradeoffs:

w/ Sam Park, Kristian Georgiev, Andrew Ilyas, Guillaume Leclerc 1/6
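To make "where in the data did a prediction come from?" concrete, the sketch below answers it the expensive way: leave-one-out retraining on a toy 1-D least-squares model. This is not TRAK. It is a brute-force baseline that needs one refit per training example, which is exactly the cost that stops scaling as models and datasets grow. The model, data, and function names are hypothetical.

```python
# Brute-force leave-one-out data attribution on a toy 1-D linear regression.
# Illustrative baseline only (not TRAK); all names and data are hypothetical.

def fit_line(xs, ys):
    """Ordinary least squares for y = w * x + b (closed form)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    w = sxy / sxx
    return w, my - w * mx

def predict(params, x):
    w, b = params
    return w * x + b

def loo_attribution(xs, ys, x_test):
    """Score each training example by how much the test prediction drops when
    that example is removed and the model is refit (positive = pushed it up)."""
    full = predict(fit_line(xs, ys), x_test)
    scores = []
    for i in range(len(xs)):
        xs_i, ys_i = xs[:i] + xs[i + 1:], ys[:i] + ys[i + 1:]
        scores.append(full - predict(fit_line(xs_i, ys_i), x_test))
    return scores

# Hypothetical data: the last point is an outlier that inflates predictions near x = 4.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [0.0, 1.0, 2.0, 3.0, 10.0]
scores = loo_attribution(xs, ys, x_test=4.0)
print(max(range(len(scores)), key=scores.__getitem__))  # 4
```

With n training examples this costs n retrainings per question; for a large neural network that is infeasible, which is the gap a scalable attribution method has to close.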

Our #NeurIPS2022 poster on in-context learning will be tomorrow (Thursday) at 4pm! Come talk to Shivam Garg and me at poster #928 🔥

LLMs can do in-context learning, but are they 'learning' new tasks or just retrieving ones seen during training? w/ Shivam Garg, Percy Liang, & Greg Valiant we study a simpler Q:

Can we train Transformers to learn simple function classes in-context? 🧵

arxiv.org/abs/2208.01066
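The thread doesn't spell out the setup, so here is a sketch under stated assumptions. It takes the linear function class as the "simple function class", with Gaussian weights and inputs. A prompt is then the sequence (x_1, f(x_1), ..., x_k, f(x_k), x_query), and a natural reference point for the model's answer is least squares fitted to the k in-context examples. The sampling distribution, the function names, and the choice of least squares as the baseline are assumptions here. The Transformer itself is not shown.

```python
import random

def sample_linear_prompt(d, k, rng):
    """Hypothetical prompt for the linear class: w ~ N(0, I_d), x_i ~ N(0, I_d),
    y_i = <w, x_i>. Returns k in-context pairs plus a query input and its label."""
    w = [rng.gauss(0, 1) for _ in range(d)]
    xs = [[rng.gauss(0, 1) for _ in range(d)] for _ in range(k + 1)]
    ys = [sum(wj * xj for wj, xj in zip(w, x)) for x in xs]
    return xs[:k], ys[:k], xs[k], ys[k]

def solve(a, b):
    """Solve the square system a z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (m[r][n] - sum(m[r][c] * z[c] for c in range(r + 1, n))) / m[r][r]
    return z

def least_squares_predict(xs, ys, x_query):
    """Baseline: solve the normal equations X^T X w = X^T y on the in-context
    examples, then predict <w_hat, x_query>. Needs k >= d for X^T X to be invertible."""
    d = len(x_query)
    xtx = [[sum(x[i] * x[j] for x in xs) for j in range(d)] for i in range(d)]
    xty = [sum(x[i] * y for x, y in zip(xs, ys)) for i in range(d)]
    w_hat = solve(xtx, xty)
    return sum(wi * xi for wi, xi in zip(w_hat, x_query))

# Usage: with noiseless labels and k >= d, least squares recovers w, so the
# prediction matches the true query label up to floating-point error.
rng = random.Random(0)
xs, ys, x_query, y_query = sample_linear_prompt(d=5, k=10, rng=rng)
pred = least_squares_predict(xs, ys, x_query)
print(pred, y_query)
```

Comparing a trained model's prediction on x_query against this baseline as k varies is one way to ask whether it is actually "learning" the function from the prompt.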