Tirthankar Ghosal

@TirthankarSlg

Scientist @ORNL #NLProc #LLMs #peerreview #SDProc Editor @SIGIRForum Org. #AutoMin2023 @SDProc @wiesp_nlp AC @IJCAIconf @emnlpmeeting Prevly @ufal_cuni @IITPAT

ID:817603403677253633

https://member.acm.org/~tghosal

07-01-2017 05:26:56

3.4K Tweets

513 Followers

1.3K Following

A suggestion for an effective 11-step LLM summer study plan:

1) Read* Chapters 1 and 2 on implementing the data loading pipeline (manning.com/books/build-a-… & github.com/rasbt/LLMs-fro…).

2) Watch Karpathy's video on training a BPE tokenizer from scratch (youtube.com/watch?v=zduSFx…).

3)

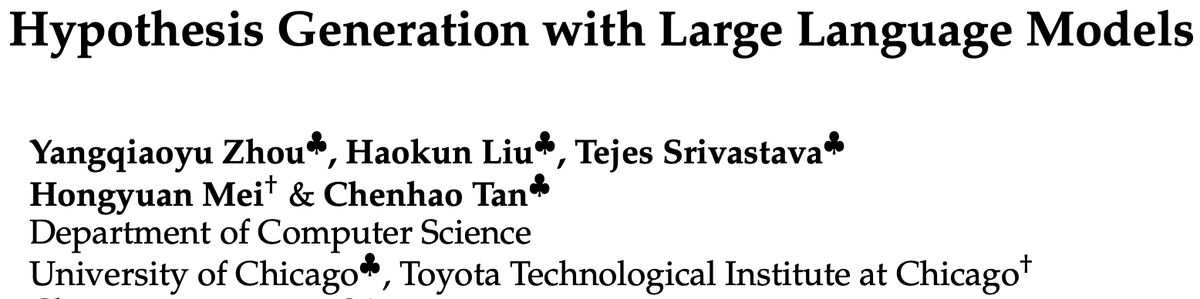

🔥Thrilled to introduce HypoGeniC: Hypothesis Generation with Large Language Models 🔥

How can LLMs systematically propose and verify hypotheses based on observations for #ScientificDiscovery?

Read our paper to find out!

📄: arxiv.org/abs/2404.04326…

Details in 🧵 (1/n):

Hi #NLProc! Happy to announce the #DAGPap24 competition on detecting automatically generated scientific papers at the Scholarly Document Processing Workshop @acl2024

👉 Competition starting Apr 2

👉 Submit your systems by Apr 30

👉 Monetary prizes for top 3 systems

👉 More info at sdproc.org/2024/sharedtas…