new portal. Produced by RLuis77

“I Can Make Your Peoples DANCE”

YOU CAN'T??? Kendrick Lamar

How?🤔

What does that say?🤔🤷🏽‍♂️

REALLY???

THEY MY PPL’S…

The Drums Never Lie…Kendrick Lamar

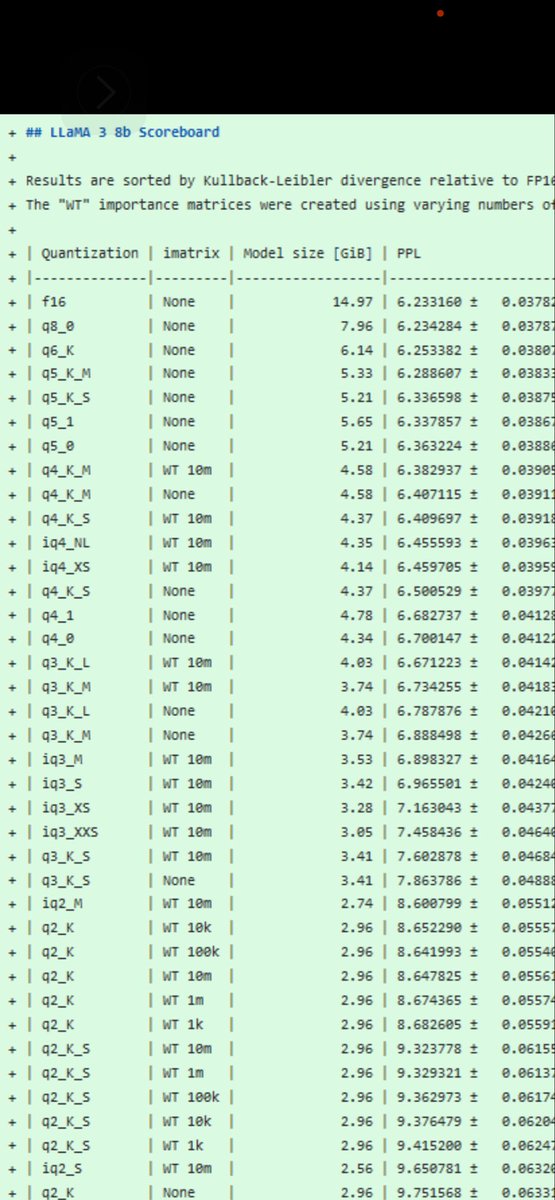

NO QUANTIZING

STRAIGHT SOUL.

Teortaxes▶️ Mmmmm not sure this is the case.

6.23 at f16 and 6.38 at 4-bit Q4_K_M looks like it's quantizing pretty well?
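
For a rough sense of how small that gap is, here is a minimal sketch of the arithmetic. It assumes the two figures are perplexity-style scores where lower is better, which the post does not state; the function and variable names are made up for illustration.

```python
def relative_increase(baseline: float, quantized: float) -> float:
    """Fractional change of the quantized score relative to the baseline."""
    return (quantized - baseline) / baseline


# Figures quoted in the post; treating them as perplexity is an assumption.
f16_score = 6.23
q4_score = 6.38

gap = relative_increase(f16_score, q4_score)
print(f"absolute gap: {q4_score - f16_score:.2f}")
print(f"relative gap: {gap:.1%}")
```

Under that assumption the 4-bit score is about 2.4% worse than f16. Whether that counts as "pretty good" depends on the model and the evaluation set.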