Ben Edelman

@EdelmanBen

Final-year PhD candidate at Harvard CS trying to understand AI scientifically. New to the platform formerly known as Twitter.

ID:2383010678

https://www.benjaminedelman.com/ 11-03-2014 02:27:18

33 Tweets

113 Followers

20 Following

In new work (with Tian Qin, Nikhil Vyas, Boaz Barak, and Ben Edelman), we show that LLMs can identify their own epistemic uncertainty in free-form text, suggesting new approaches for combating hallucinations. (1/6)

Paper: arxiv.org/abs/2402.03563

Blog: bit.ly/3U2emAP

New Deeper Learning blog post: a linear probe can unlock an LM's metacognitive capability to distinguish tokens that are 'knowable' from tokens where its predictions can't be improved. bit.ly/3U2emAP

Gustaf Ahdritz, Tian Qin, Nikhil Vyas, Boaz Barak, Ben Edelman

#AI #LLM
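A linear probe is a single linear layer (here, logistic regression) trained on top of a frozen model's internal activations. The sketch below is a minimal illustration under stated assumptions. The features are synthetic Gaussian vectors standing in for LM activations. The binary label ("knowable" vs. not) is a hypothetical one defined by a hidden direction. It does not reproduce the paper's actual labeling scheme or probe setup, and `train_linear_probe` / `probe_predict` are illustrative names.

```python
import numpy as np

def train_linear_probe(features, labels, lr=0.1, steps=500):
    """Fit a logistic-regression probe (w, b) by full-batch gradient descent.

    features: (n, d) array standing in for frozen LM activations (assumption).
    labels:   (n,) array of 0/1, where 1 = "knowable" token (hypothetical labeling).
    """
    n, d = features.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(steps):
        logits = features @ w + b
        probs = 1.0 / (1.0 + np.exp(-logits))
        err = probs - labels  # derivative of the log-loss with respect to each logit
        w -= lr * (features.T @ err) / n
        b -= lr * err.mean()
    return w, b

def probe_predict(features, w, b):
    # Probability, under the probe, that each token is "knowable".
    return 1.0 / (1.0 + np.exp(-(features @ w + b)))

# Hypothetical stand-in data: a hidden direction separates the two classes.
rng = np.random.default_rng(0)
d = 16
direction = rng.normal(size=d)
X = rng.normal(size=(1000, d))
y = (X @ direction > 0).astype(float)

w, b = train_linear_probe(X, y)
acc = ((probe_predict(X, w, b) > 0.5) == y).mean()
print(f"probe accuracy on synthetic data: {acc:.2f}")
```

The design point is that the probe is linear and the underlying model stays frozen. High probe accuracy would then suggest that the distinction is already linearly readable from the activations rather than learned by the probe itself.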

Two (out of 8) things that Sam Bowman wants you to know about LLMs:

(i) LLMs predictably get more capable with increasing investment

(ii) Many important LLM behaviors emerge unpredictably

How can we get ahead of the curve and predict these ‘unpredictable’ behaviors?🧵⬇️

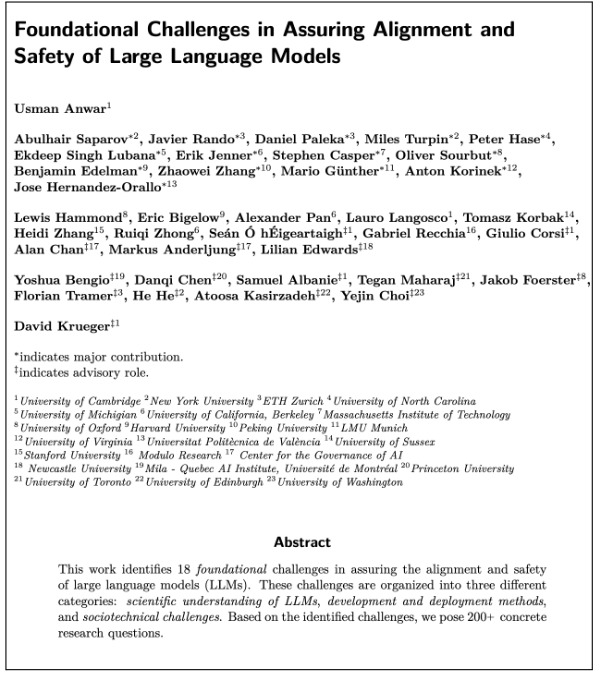

I’m super excited to release our 100+ page collaborative agenda, led by Usman Anwar, on “Foundational Challenges In Assuring Alignment and Safety of LLMs” alongside 35+ co-authors from the NLP, ML, and AI Safety communities!

Some highlights below...

New paper on understanding the evolution of induction heads using a clean task: in-context learning of Markov chains. Phase transitions, cool theory and videos! Check it out!

Shout-out to Ezra Edelman (first PhD paper!) and Nikos for leading this work!

How do induction heads / in-context learning emerge? We study a new synthetic task to better understand how these circuits evolve (in stages!) over time.

w/ Ben Edelman, Surbhi Goel, Eran Malach & Nikos Tsilivis

Blog: unprovenalgos.github.io/statistical-in…

Paper: arxiv.org/abs/2402.11004 (1/7)
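To make the synthetic task concrete: in a task of this kind, each sequence is assumed to come from its own randomly drawn Markov chain, so a model must infer the transition statistics from the context itself. The sketch below rests on several assumptions: k states, rows of the transition matrix drawn from a uniform Dirichlet prior, and an add-one smoothed bigram counter as the in-context predictor. The function names and parameter values are illustrative and not taken from the paper.

```python
import numpy as np

def sample_markov_sequence(num_states, length, rng):
    """Draw a random transition matrix (rows ~ Dirichlet(1, ..., 1)) and a sequence from it."""
    P = rng.dirichlet(np.ones(num_states), size=num_states)  # row i: P(next | current = i)
    seq = [rng.integers(num_states)]  # uniform random start state (assumption)
    for _ in range(length - 1):
        seq.append(rng.choice(num_states, p=P[seq[-1]]))
    return P, np.array(seq)

def in_context_bigram_predict(seq, num_states):
    """Predict the next-token distribution from bigram counts observed in the context.

    Add-one (Laplace) smoothing is the posterior mean under a uniform
    Dirichlet(1) prior on each row of the transition matrix.
    """
    counts = np.ones((num_states, num_states))  # one pseudo-count per transition
    for a, b in zip(seq[:-1], seq[1:]):
        counts[a, b] += 1
    last = seq[-1]
    return counts[last] / counts[last].sum()

rng = np.random.default_rng(0)
P, seq = sample_markov_sequence(num_states=3, length=500, rng=rng)
pred = in_context_bigram_predict(seq, num_states=3)
print("true row:     ", np.round(P[seq[-1]], 3))
print("bigram guess: ", np.round(pred, 3))
```

As a hand-checkable case, take the context 0, 1, 0, 1, 0 over two states. The counts out of state 0 are 1 for 0→0 (pseudo-count only) and 3 for 0→1 (two observed plus one pseudo-count), so the predicted distribution after the final 0 is [0.25, 0.75]. Without smoothing, a counter would assign zero probability to transitions not yet seen in context.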