Gregor Bachmann

@GregorBachmann1

I am a PhD student @ETH Zürich working on deep learning. MLP-pilled 💊.

https://t.co/yWdDEV6Z15

ID:1527256391806746624

19-05-2022 11:54:49

84 Tweets

230 Followers

272 Following

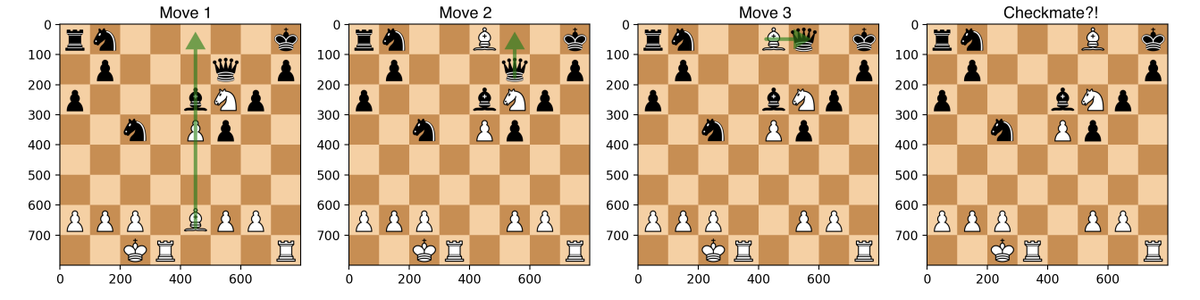

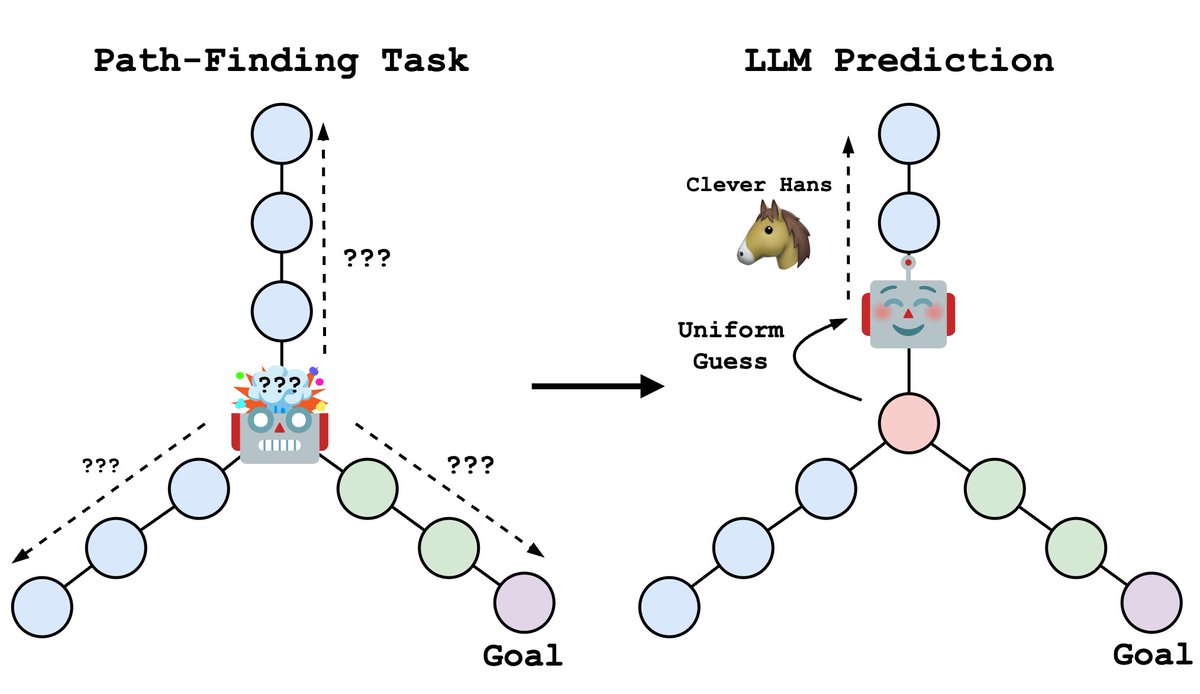

From stochastic parrot 🦜 to Clever Hans 🐴? In our work with Vaishnavh Nagarajan, we carefully analyse the debate surrounding next-token prediction and identify a new failure of LLMs due to teacher-forcing 👨🏻‍🎓! Check out our work arxiv.org/abs/2403.06963 and the linked thread!

🗣️ “Next-token predictors can’t plan!” ⚔️ “False! Every distribution is expressible as a product of next-token probabilities!” 🗣️

In work w/ Gregor Bachmann, we carefully flesh out this emerging, fragmented debate & articulate a key new failure. 🔴 arxiv.org/abs/2403.06963
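The counter-claim in the quoted tweet rests on the chain rule of probability: any joint distribution over a token sequence factorizes exactly into next-token conditionals. Stated with $x_1, \dots, x_T$ as our own notation for the tokens:

```latex
p(x_1, \dots, x_T) = \prod_{t=1}^{T} p(x_t \mid x_1, \dots, x_{t-1})
```

This only says such a factorization exists. Whether next-token training with teacher-forcing actually learns it is the separate question the linked paper examines.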

Why can the learning rate in neural networks transfer from small to large models (in both width and depth)? It turns out that the sharpness dynamics can explain it. Check out our new work! arxiv.org/abs/2402.17457

w/ Alex Meterez (co-first), Antonio Orvieto and T. Hofmann

🌟 Excited to present LIME, localized image editing via cross-attention regularization without extra data, re-training, or fine-tuning!

Collaboration with Alessio Tonioni, Yongqin Xian, Thomas Hofmann, Federico Tombari

📄 Paper: arxiv.org/pdf/2312.09256

🔗 Project: enisimsar.github.io/LIME

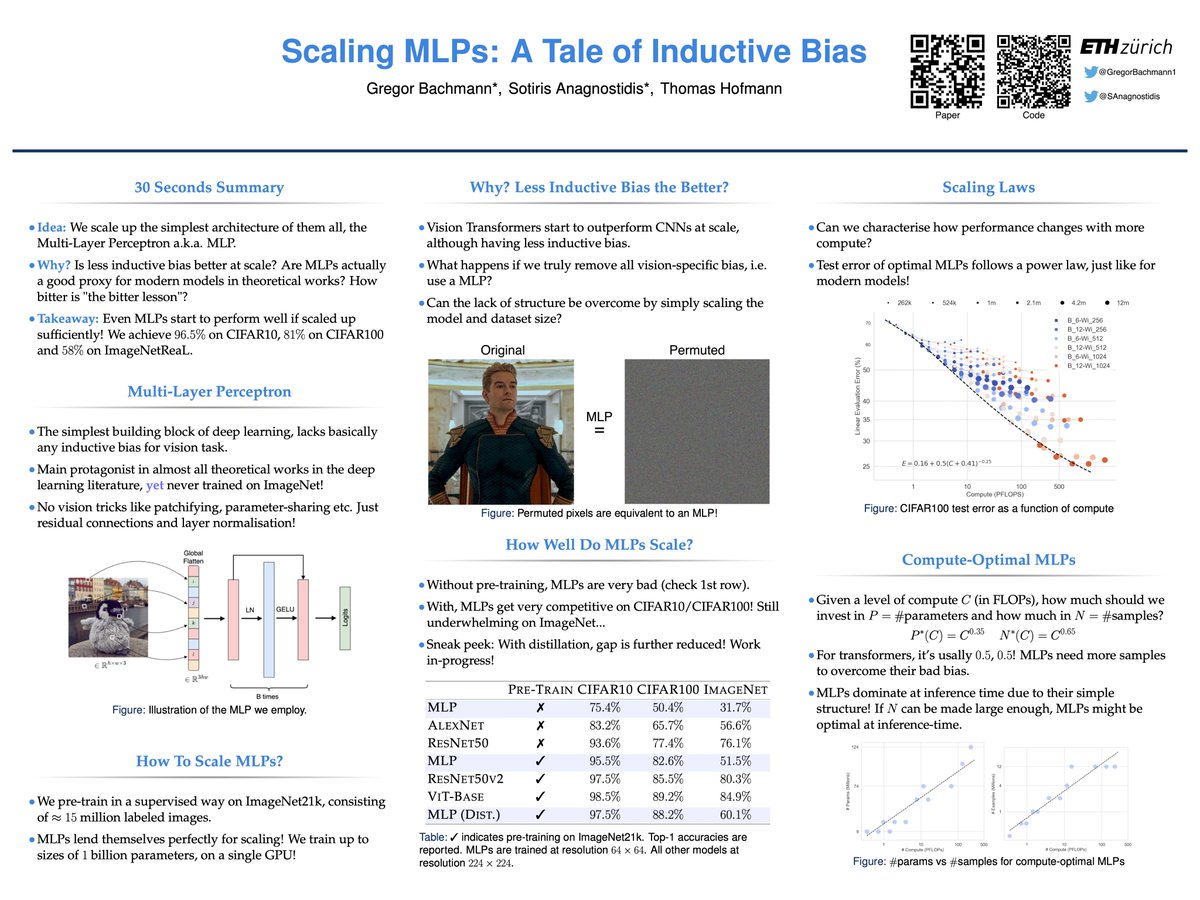

I’ll be presenting 'Scaling MLPs' at #NeurIPS2023, tomorrow (Wed) at 10:45am!

Hyped to discuss things like inductive bias, the bitter lesson, compute-optimality and scaling laws 👷⚖️📈

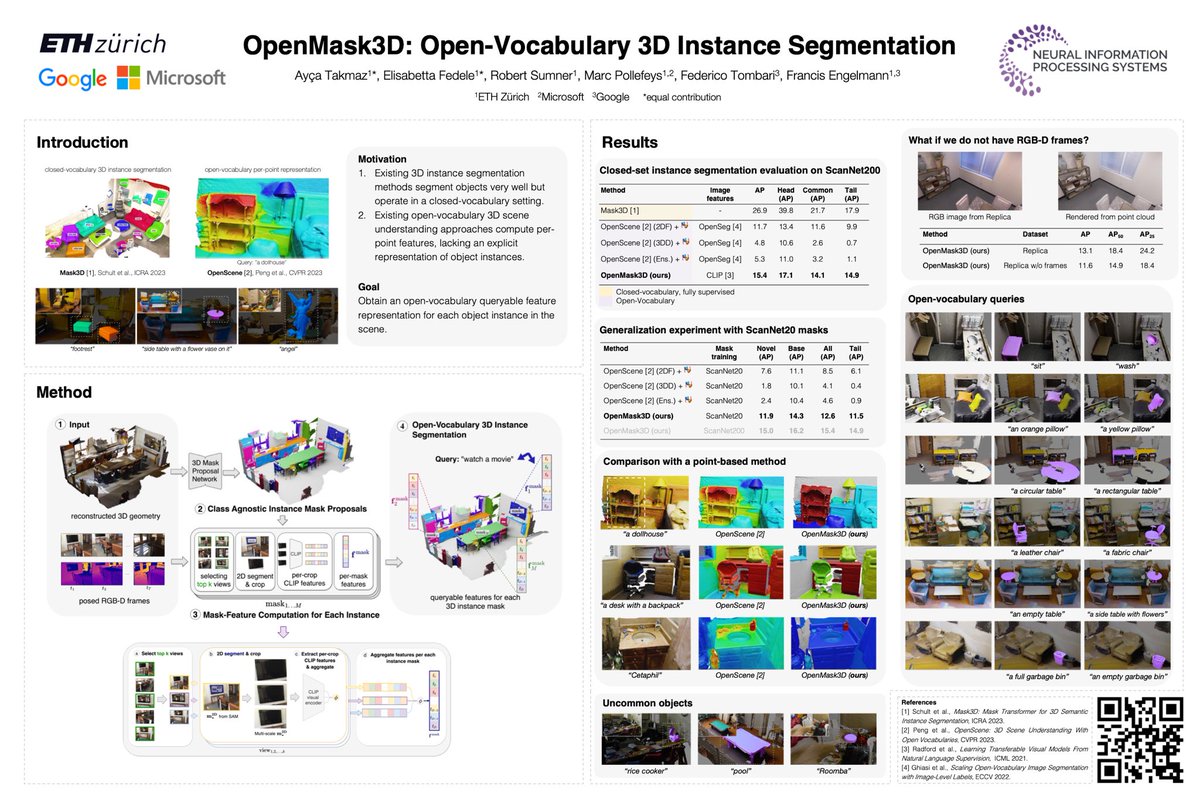

Today Elisabetta Fedele and I will present our work OpenMask3D at the NeurIPS conference 🎷

Visit our poster to learn more about OpenMask3D or to chat with us!

📍 Great Hall & Hall B1+B2 (level 1) #906

🕰️ 10:45-12:45

🌎 openmask3d.github.io

Francis Engelmann Federico Tombari Marc Pollefeys

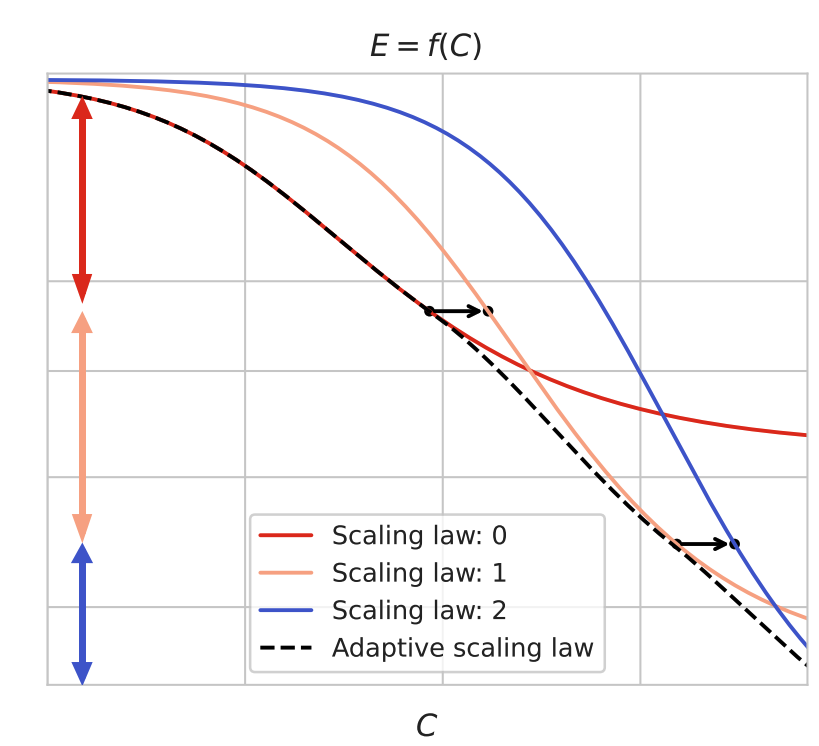

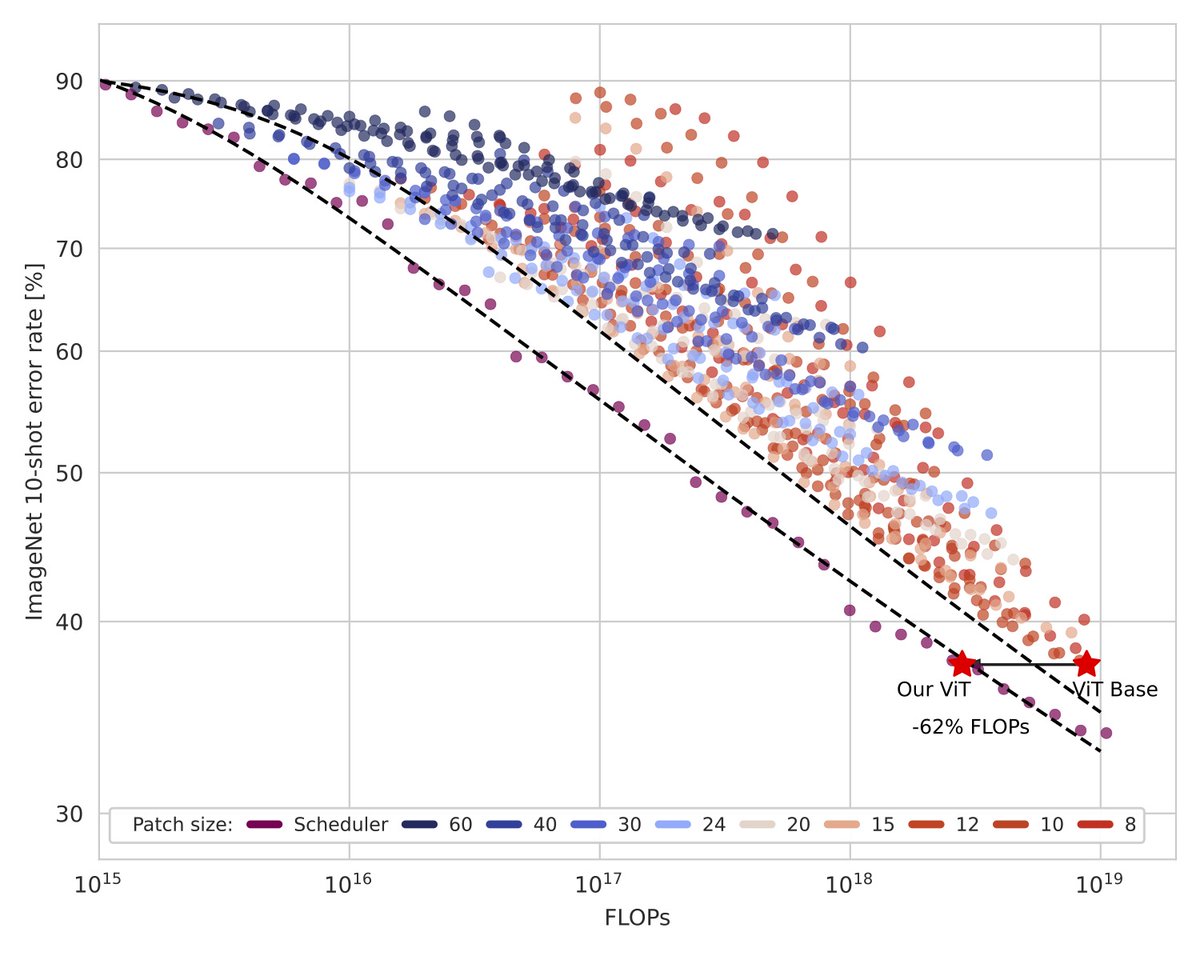

Want to train a compute-optimal model but get there faster?

Try shape-adaptive training and follow the optimal curve for different “shape” configurations 🏎️💨!

Check out the work by Sotiris Anagnostidis and me for more!

📝arxiv.org/abs/2311.03233

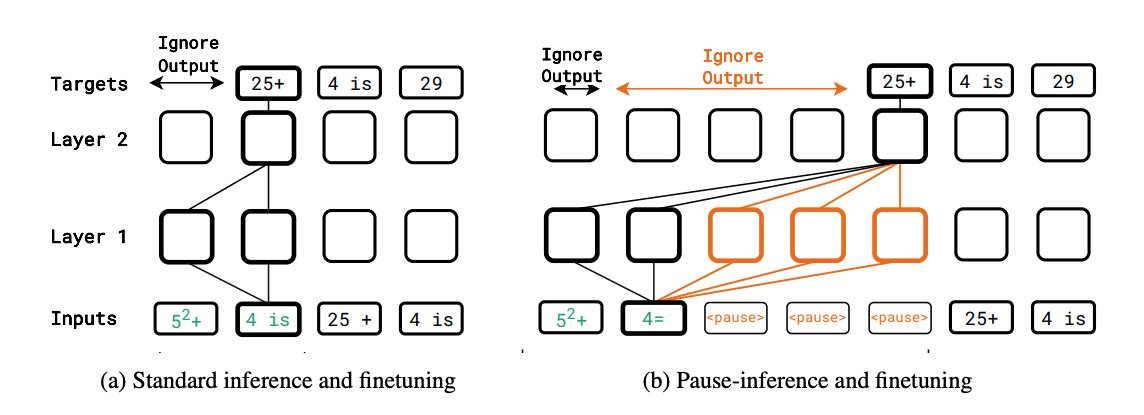

Isn’t it arbitrary that a Transformer must produce the (K+1)-th token by attending to only K vectors in each layer?

In work led by Sachin Goyal, we explore a way to break this rule: by appending copies of a *single* “pause” token to delay the output.

arxiv.org/abs/2310.02226 1/
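The input-level idea in this thread can be illustrated without any model: with K prompt tokens and M appended pauses, the position whose output is read as the answer can attend over K + M vectors instead of K. A minimal sketch, assuming plain lists of token ids and a hypothetical `PAUSE_ID`; the function names are ours, not the paper's code, and treating earlier pause outputs as ignored is an assumption of this sketch.

```python
from typing import List

# Hypothetical id for the single "<pause>" token; a real tokenizer assigns its own.
PAUSE_ID = 50_000


def append_pauses(prompt_ids: List[int], num_pauses: int, pause_id: int = PAUSE_ID) -> List[int]:
    """Delay the answer by appending `num_pauses` copies of one pause token.

    Returns a new list; the caller's prompt is left unchanged.
    """
    if num_pauses < 0:
        raise ValueError("num_pauses must be non-negative")
    return prompt_ids + [pause_id] * num_pauses


def answer_position(extended_ids: List[int]) -> int:
    """Index whose output is read as the next token (the last position).

    In this sketch, outputs at the earlier pause positions are simply ignored.
    """
    return len(extended_ids) - 1


prompt = [12, 7, 99]                       # K = 3 prompt tokens
extended = append_pauses(prompt, num_pauses=2)
print(extended)                            # [12, 7, 99, 50000, 50000]
print(answer_position(extended))           # 4
print(len(extended))                       # 5 positions to attend over instead of 3
```

Because every pause is the same token, the extra positions carry no new input information; they only give each layer more vectors to compute over before the answer is produced.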

I had an argument with Preetum Nakkiran about MLPs 4 years ago. He said with enough data + compute the MLP/ConvNet gap would go to 0. I was convolution-pilled and convinced this wasn't possible. He was right: arxiv.org/abs/2306.13575

We will be at #ICCV2023 to present Human3D 🧑‍🤝‍🧑!

📌Poster: Wednesday, October 4th - 10:30-12:30, Paper ID 4949 - Room 'Nord' - 103

Project page: human-3d.github.io

Code & data: github.com/human-3d

Jonas Schult Irem Kaftan Mertcan Akçay Francis Engelmann Siyu Tang @VLG-ETHZ