Jacob Steinhardt

@JacobSteinhardt

Assistant Professor of Statistics, UC Berkeley

ID:438570403

16-12-2011 19:04:34

323 Tweets

7.1K Followers

67 Following

In a new preprint with Jarek Blasiok, Rares Buhai, and David Steurer, we show a surprisingly simple greedy algorithm that can list decode planted cliques in the semirandom model at k ~ sqrt(n) log^2 n, essentially optimal up to the log^2 n factor. This ~resolves Jacob Steinhardt's open question.

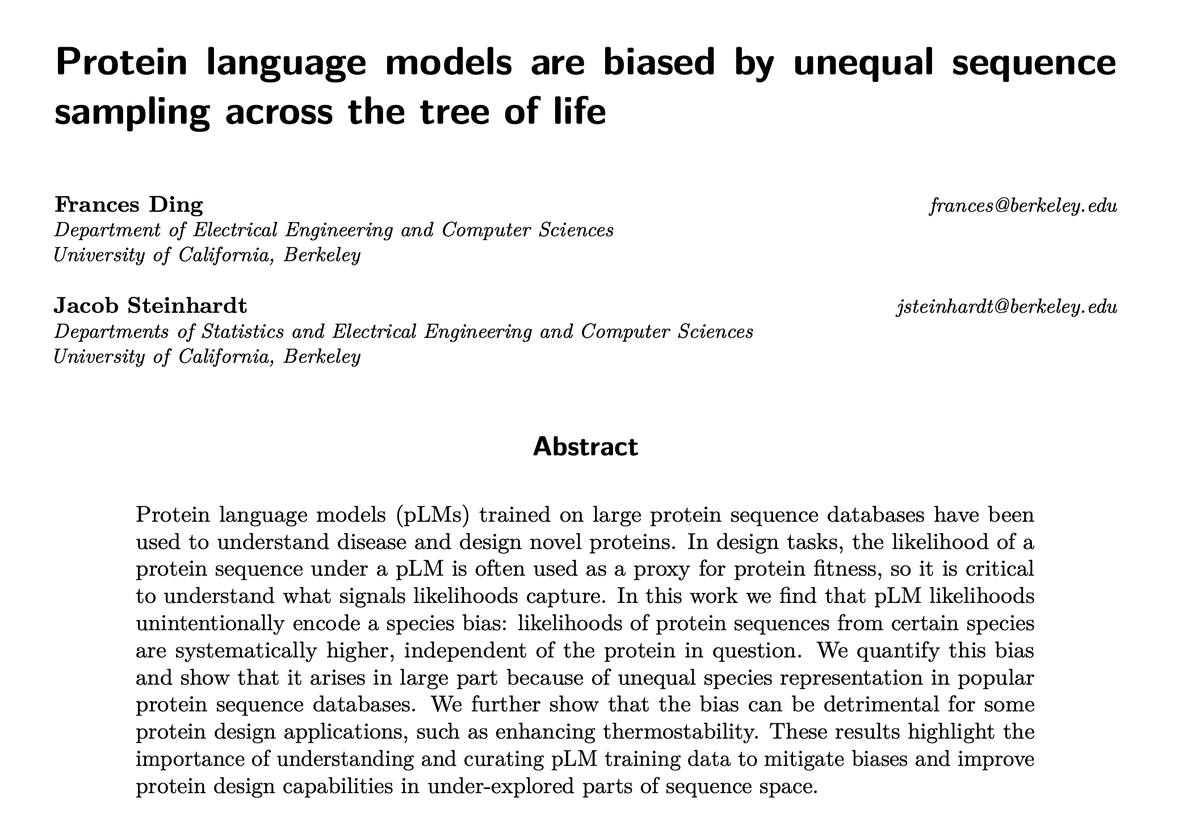

Protein language models (pLMs) can give protein sequences likelihood scores, which are commonly used as a proxy for fitness in protein engineering. But what do likelihoods encode?

In a new paper (w/ Jacob Steinhardt) we find that pLM likelihoods have a strong species bias!

1/

![Yossi Gandelsman (@YGandelsman) on Twitter photo 2024-01-17 03:47:16 Accepted to oral #ICLR2024! *Interpreting CLIP's Image Representation via Text-Based Decomposition* CLIP produces image representations that are useful for various downstream tasks. But what information is actually encoded in these representations? [1/8]](https://pbs.twimg.com/media/GEA-mXWboAAWicX.jpg)