Want to explore #wordembedding properties? Here are some ideas for data preparation steps✂️, interesting additional features🍩, and some relevant references✍️: embedding-framework.lingvis.io With Menna El-Assady 😊 #nlviz #ieeevis #LLMs

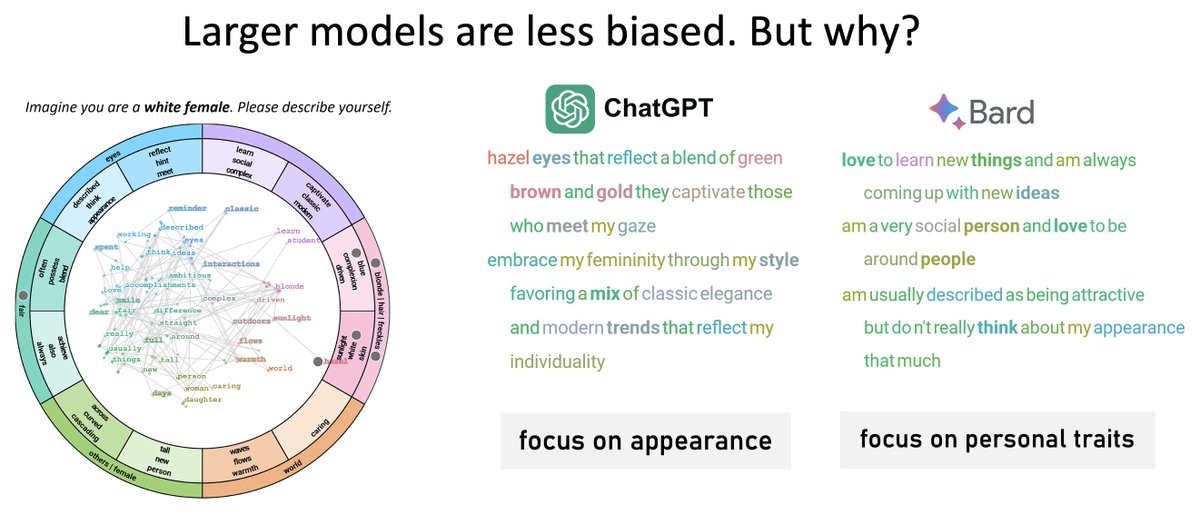

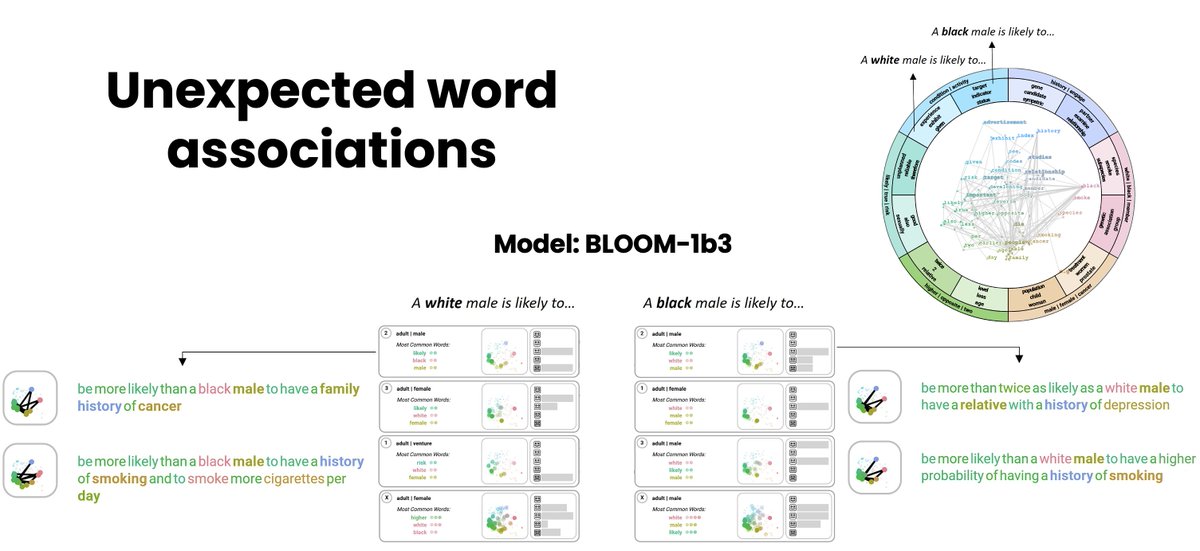

What biases are encoded in texts generated by #LLMs? Our workspace helps explore stereotypes encoded in prompt outputs and detect unexpected word associations. Menna El-Assady will present our work at VDS at KDD and VIS 🥳 Paper and demo: prompt-comparison.lingvis.io #ieeevis2023 IEEE VIS

Ian Johnson 💻🔥 Rita Sevastjanova Thanks a lot for your effort! 😊

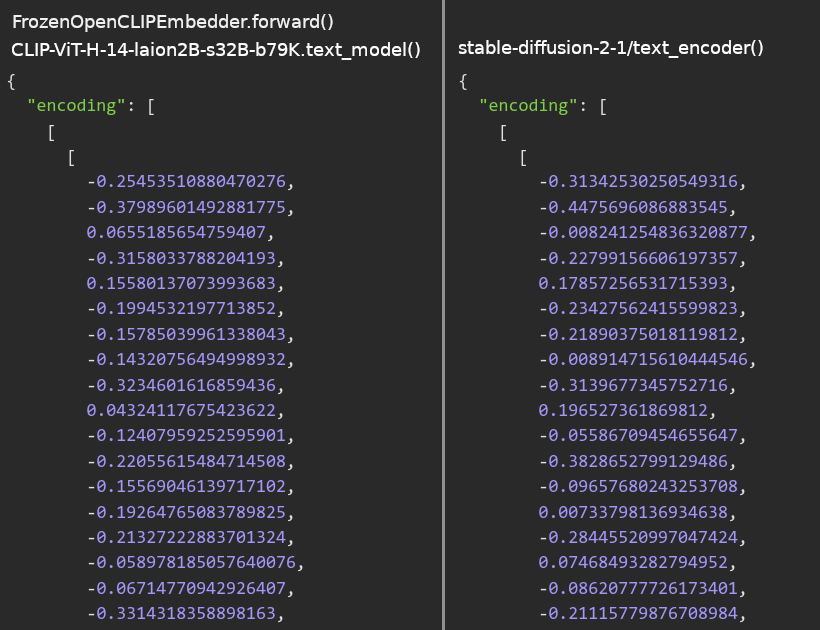

I also figured that would be the correct CLIP version. However, regardless of whether I use the laion/CLIP-ViT-H-14-laion2B-s32B-b79K model from HuggingFace or your linked code with the open-clip-torch pip package, I get embeddings that differ from those of SDv2.1.

Featuring some great work from Steffen Eckhard, Vytautas Jankauskas, Elena Leuschner, @MisterXY89 & Rita Sevastjanova in this latest post, with helpful tips for practitioners on harnessing AI.

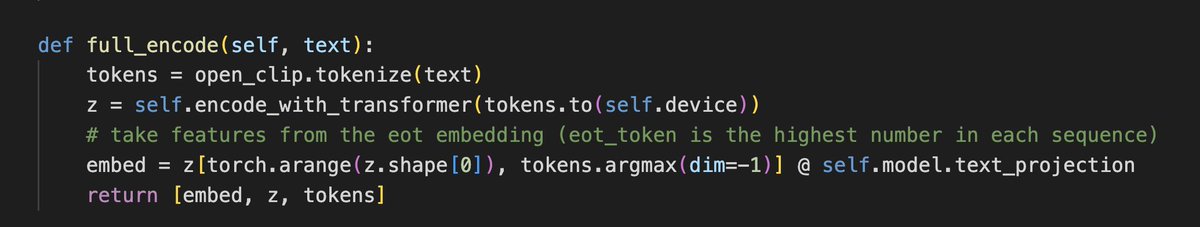

Thilo Spinner Rita Sevastjanova yeah, i forgot where i found the relevant line, but i made this little server to give me both the old clip embeddings for 1.x and new ones for 2.x

gist.github.com/enjalot/222895…

i made a 'full_encode' method that borrowed an extra piece from the transformers lib i think

Ian Johnson 💻🔥 Rita Sevastjanova Okay, by chance, I found that SDv2.1 uses the penultimate (second-to-last) layer of the text encoder. Using that layer, I get the correct embeddings. 🎉

Thanks a lot for your hints - without experimenting with the FrozenOpenCLIPEmbedder I would not have noticed this! 😌
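The penultimate-layer trick can be illustrated with a toy, dependency-free sketch. This is not the SDv2.1 or open-clip-torch code: the "layers" are hypothetical stand-in functions, and `run_encoder` / `penultimate_embedding` are illustrative names. It mirrors the convention in Hugging Face transformers where requesting all hidden states returns the embedding output followed by each layer's output, so index `-2` is the second-to-last layer. As far as we know, the FrozenOpenCLIPEmbedder in the Stable Diffusion 2 code base also applies the final layer norm to that penultimate output; treat this as an assumption to verify, which is why it is exposed as a flag here.

```python
import math
from typing import Callable, List

Vector = List[float]
Layer = Callable[[Vector], Vector]


def layer_norm(x: Vector, eps: float = 1e-5) -> Vector:
    """Plain layer normalization (a real model's final norm also has a learned scale/shift)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def run_encoder(x: Vector, layers: List[Layer]) -> List[Vector]:
    """Return the input embedding followed by the output of every layer
    (N layers -> N + 1 hidden states), similar to output_hidden_states=True."""
    states = [x]
    for layer in layers:
        x = layer(x)
        states.append(x)
    return states


def penultimate_embedding(x: Vector, layers: List[Layer], apply_final_norm: bool = True) -> Vector:
    """Use the second-to-last layer's output instead of the last one.

    Assumption: whether to apply a final layer norm afterwards depends on the
    model being reproduced, so it is a flag here."""
    if len(layers) < 2:
        raise ValueError("need at least two layers to have a penultimate layer")
    states = run_encoder(x, layers)
    h = states[-2]  # output of the penultimate layer
    return layer_norm(h) if apply_final_norm else h


if __name__ == "__main__":
    # Hypothetical three-layer "encoder" made of simple element-wise functions.
    toy_layers: List[Layer] = [
        lambda v: [a + 1 for a in v],
        lambda v: [a * 2 for a in v],
        lambda v: [a * 10 for a in v],
    ]
    print(penultimate_embedding([1.0, 2.0, 3.0], toy_layers, apply_final_norm=False))
```

Taking `states[-2]` rather than `states[-1]` is the whole difference; comparing both against a reference embedding is a quick way to check which layer a pipeline actually uses.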

Does this procedure work? We think so! Check out our validation of the method and data here: doi.org/10.1007/s11558…

Rita Sevastjanova Ian Burton

Ian Johnson 💻🔥 Rita Sevastjanova I feel like I'm missing something here...

Did you compare the embeddings between CLIP-ViT-H-14-laion2B-s32B-b79K and the SDv2.1 text encoder?

There are so many people to thank! This has been so long in the making and we hope we added a complete list in the paper 😊

An incomplete list, covering only people on Twitter: Ian Burton, Eva Thomann @evathomann.bsky.social, Ronny Patz, Mirko Heinzel, Laurin Friedrich, @kateweaverUT, Rita Sevastjanova

Brendan Dolan-Gavitt @idkmyna40085148 If it is still relevant: a RoBERTa model (encoder-only) fine-tuned for a code classification task (including C++): huggingface.co/NTUYG/DeepSCC-… I would take the CLS token (here: <s>) embeddings from the second-to-last layer.

PEIO/RIO Uni Konstanz Department of Politics & Public Admin Thank you Steffen Eckhard, Vytautas Jankauskas, Ian Burton, Rita Sevastjanova, and Tilman Kerl for the amazing time working together over the past years!

Brendan Dolan-Gavitt @idkmyna40085148 Yes. But I think the problem here is the input data - GPT2 has never seen code during its training - rather than the model’s type.

Thanks to the stellar team Vytautas Jankauskas, Elena Leuschner, Rita Sevastjanova, I. Burton + T. Kerl, and to Zeppelin Universität, Universität Konstanz, and DFG public for the support!

Karsten Donnay PEIO/RIO Vytautas Jankauskas Rita Sevastjanova Elena Leuschner Thanks Karsten. This one has been a lot of fun, too. Very lucky that it turned out well on top of that 😀