Yejin Choi

@YejinChoinka

professor at UW, director at AI2, adventurer at heart

ID:893882282175471616

https://homes.cs.washington.edu/~yejin/

05-08-2017 17:11:58

1.6K Tweets

18.6K Followers

330 Following

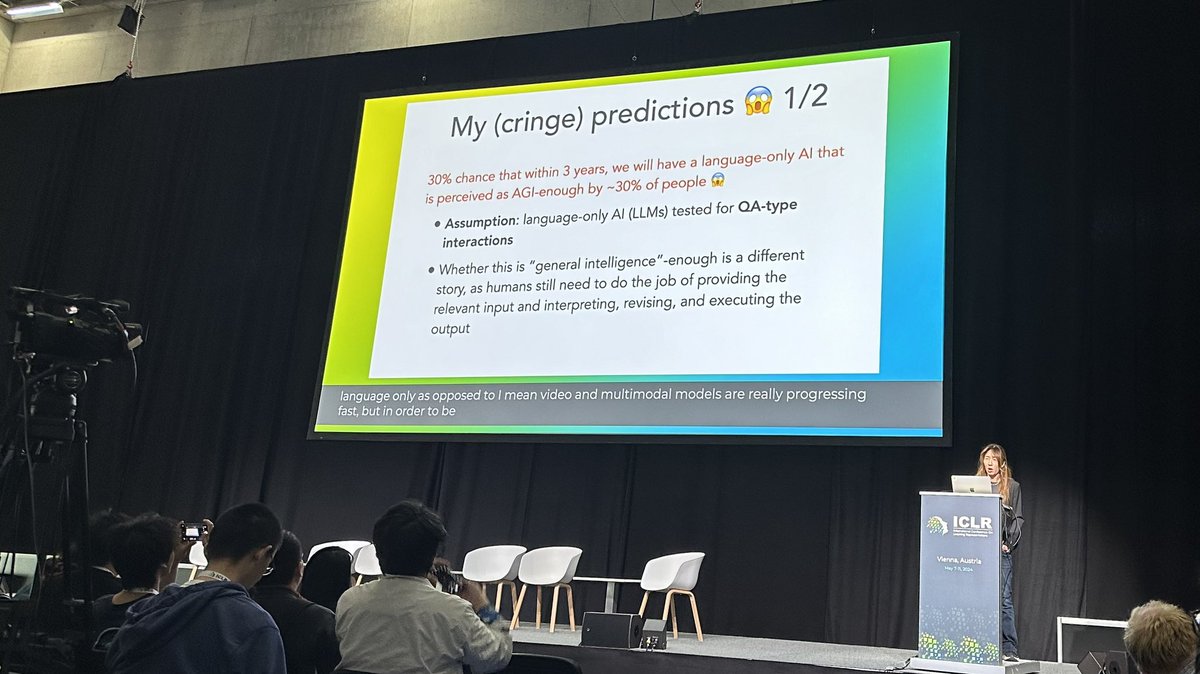

Yejin Choi makes a prediction that I can get behind: “30% chance that within 3 years, we will have a language-only AI that is perceived as AGI-enough by ~30% of people”. This seems right. People, including scientists, easily (over-)attribute intelligence to machines.

We created reviewing guidelines for Conference on Language Modeling. Not intended to automate the committee work, or dictate constraints. But, to inspire a thoughtful reviewing process, for an exciting and impactful program of the highest possible quality. We have a wonderful program committee ❤️

We took this on Day 2 of #TED2024.

Some #AI ROCK⭐️'s... Fei-Fei Li, Daniela Rus, MIT CSAIL, Helen Toner, Yejin Choi, ruchowdh.bsky.social, Niceaunties. And speaking today: Dr. Catie Cuan + Prof. Anima Anandkumar

...oh...and then there's me🤣

I’m super excited to release our 100+ page collaborative agenda - led by Usman Anwar - on “Foundational Challenges In Assuring Alignment and Safety of LLMs” alongside 35+ co-authors from NLP, ML, and AI Safety communities!

Some highlights below...

In a couple of hours at The GenLaw Center in DC, I will be talking about what differential privacy is, what it is not, and some common misconceptions about privacy for generative AI!

Join us on the live stream: tinyurl.com/genlaw-stream

Slides: tinyurl.com/genlaw-dp-2024

🥰Excited to share that I will be joining AI2 Allen Institute for AI MOSAIC this September as a predoctoral young investigator!! So excited to continue working with the amazing Yejin Choi, Nouha Dziri, Liwei Jiang, and Kavel Rao, and can't wait to collaborate with others!

The infini-gram paper is updated with the incredible feedback from the online community 🧡 We added references to papers of Jeff Dean (@🏡), Yee Whye Teh, Ehsan Shareghi, Edward Raff, et al.

arxiv.org/abs/2401.17377

Also happy to share that the infini-gram API has served 30 million queries!