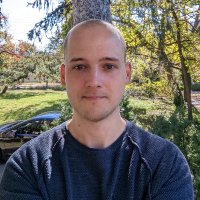

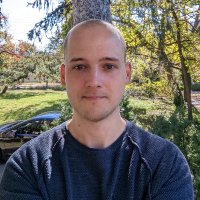

Jonas Geiping

@jonasgeiping

Machine Learning Research at the ELLIS Institute & MPI-IS // Investigating fundamental questions in Safety, Security, Privacy & Efficiency of modern ML

ID:1443639893083758598

https://jonasgeiping.github.io/

Joined 30-09-2021 18:12:52

328 Tweets

1.6K Followers

612 Following

🎙 The second episode of the Cyber Valley Podcast with our Principal Investigator Jonas Geiping is now available! 🚀 Tune in to learn about the safety and efficiency of AI.

👉 Check it out: institute-tue.ellis.eu/en/news/cyber-…

🎙 The first episode of the Cyber Valley Podcast with our Principal Investigators is now out! 🚀 @Orvieto_Antonio #AI Podcast #AI Research #AI

🔗 Learn more: institute-tue.ellis.eu/en/news/cyber-…

🚀 Get ready to dive deep into the captivating world of artificial intelligence with us!

The Cyber Valley Podcast is coming soon...

🎙️ Don’t miss our unforgettable episodes, created in collaboration with the ELLIS Institute Tübingen #AI Podcast #AI Research #ELLIS #AI Antonio Orvieto

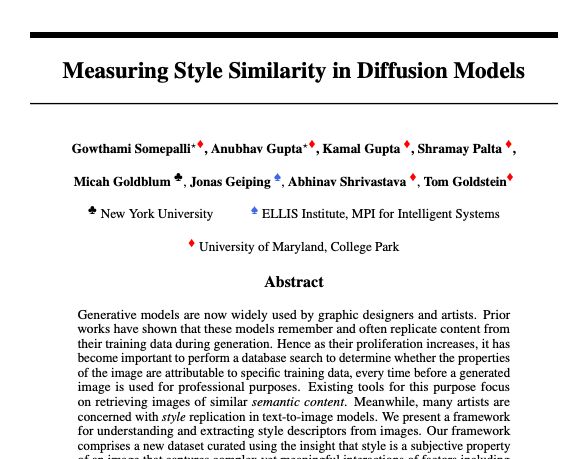

How can we define style in generated images, and how can we compare it?

For example, how can we tell whether a generated image accidentally copies an existing art style?

Gowthami Somepalli led our recent investigation into new models and data for this purpose, summarized here:

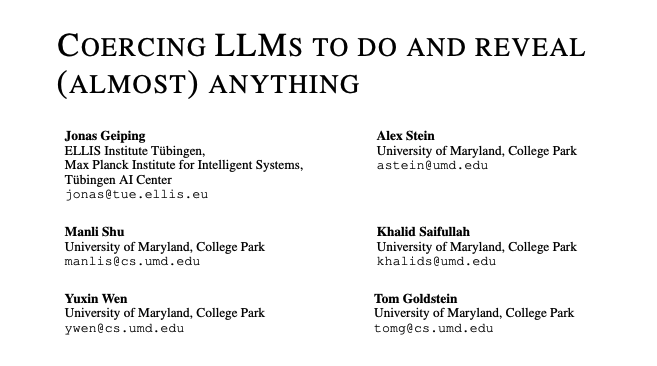

Had a very interesting chat with Sam Charrington on The TWIML AI Podcast recently, broadly about adversarial attacks on LLMs.

I'm long overdue to post a thread about this research and all the ways of coercing LLMs to do and reveal (almost) anything. I'll get to it tomorrow!

Excited to share our latest paper on CLIP model inversion, uncovering surprising occurrences of NSFW images and more! Heartfelt thanks to all my amazing collaborators! ♥️ Atoosa Chegini, Jonas Geiping, Soheil Feizi, Tom Goldstein

Paper: huggingface.co/papers/2403.02…

Now accepted in Transactions on Machine Learning Research (TMLR)!

Thank you, team:

Dinesh Manocha, Furong Huang, Souradip Chakraborty, Jonas Geiping, Soumya Jana, Soumya Suvra Ghosal

UMD Department of Computer Science GAMMA UMD

I am at #NeurIPS2023 now.

I am also on the academic job market, and humbled to be selected as a 2023 EECS Rising Star✨. I work on ML security, privacy & data transparency.

Appreciate any reposts & happy to chat in person! CV+statements: tinyurl.com/yangsibo

Find me at ⬇️