Katherine Tian

@kattian_

cs/stat @harvard, working on calibration & factuality of LLMs, prev @GoogleAI tensorflow, golden state @warriors fan

ID:951151284735827968

https://kttian.github.io/

10-01-2018 17:58:32

127 Tweets

712 Followers

494 Following

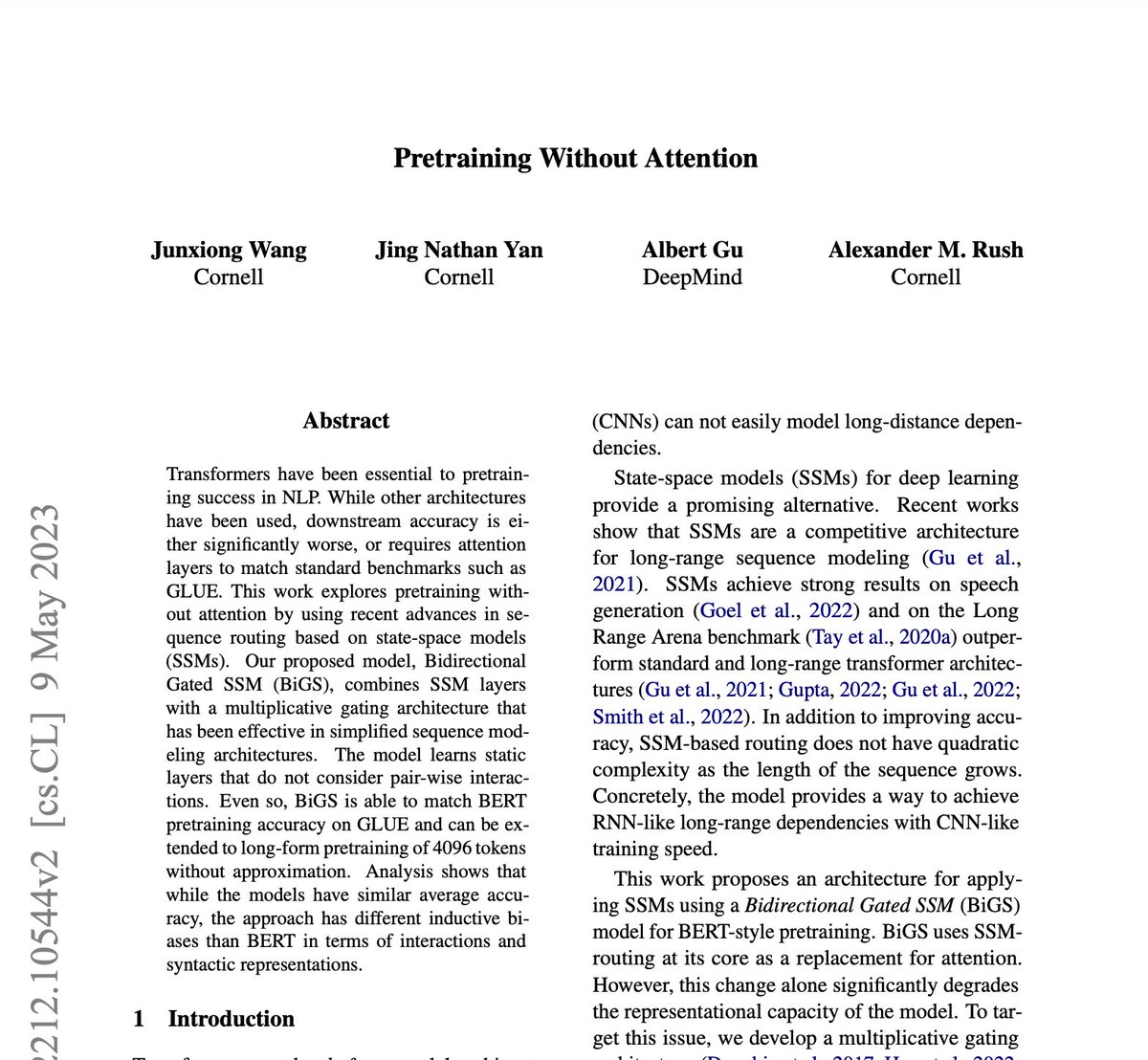

Come by the #NeurIPS2023 Instruction Following workshop (room 220-222) to see our work on:

*Emulated fine-tuning*: RLHF without fine-tuning!

*Fine-tuning for factuality*: how to fine-tune LLMs directly for factuality, reducing hallucination by >50%

RIGHT NOW!!!

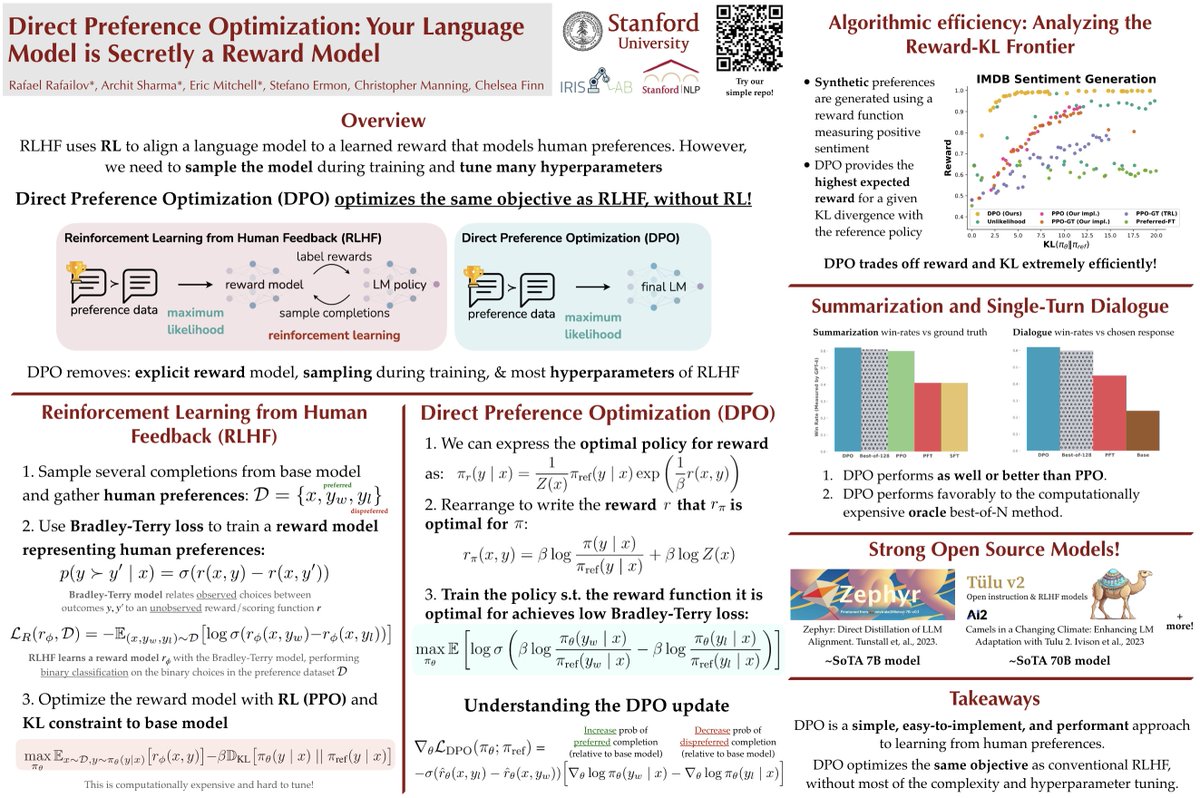

DPO is a runner-up for NeurIPS outstanding paper. 🙌

Big congrats especially to the students Rafael Rafailov, Archit Sharma, Eric & the other awardees.

If you haven't learned about DPO already, check out the oral & poster 👇 on Thurs afternoon.

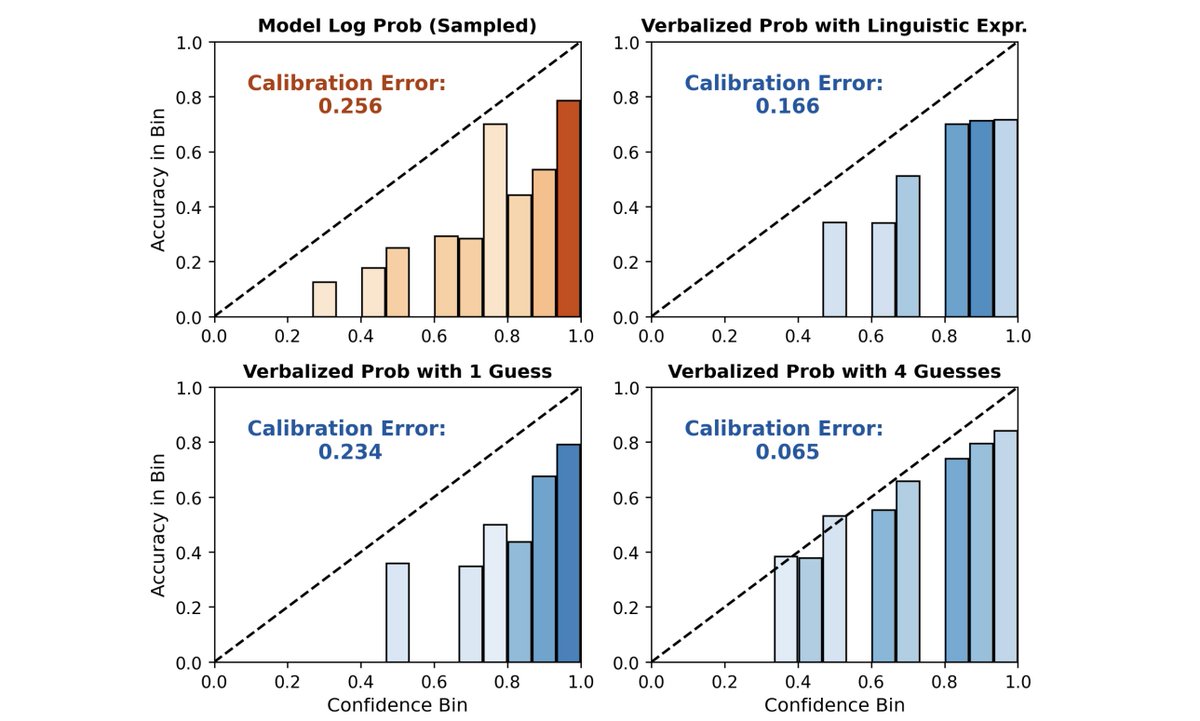

Come see Katherine Tian @ ICLR 🇦🇹's work on the ability of RLHF'd LLMs to *directly verbalize* probabilities (yes, that's right, as tokens) that are actually pretty well calibrated! (Usually better than the log probs!!! 🤯🤯🤯)

Poster 14B

R I G H T N O W until 3:30 SG time!!

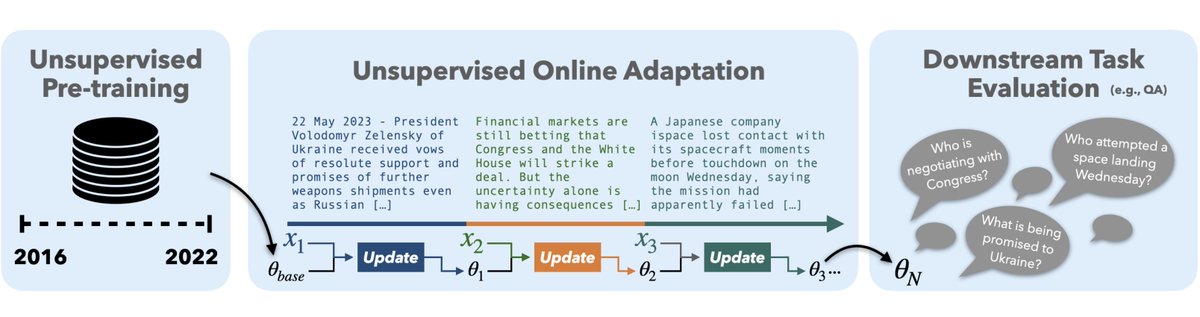

LLMs fine-tuned with RLHF are known to be poorly calibrated.

We found that they can actually be quite good at *verbalizing* their confidence.

Led by Katherine Tian @ ICLR 🇦🇹 and Eric, at #EMNLP2023 this week.

Paper: arxiv.org/abs/2305.14975