Miles Turpin

@milesaturpin

Language model alignment @nyuniversity

ID:865609028579213312

http://milesturp.in/about

19-05-2017 16:44:09

365 Tweets

988 Followers

1.3K Following

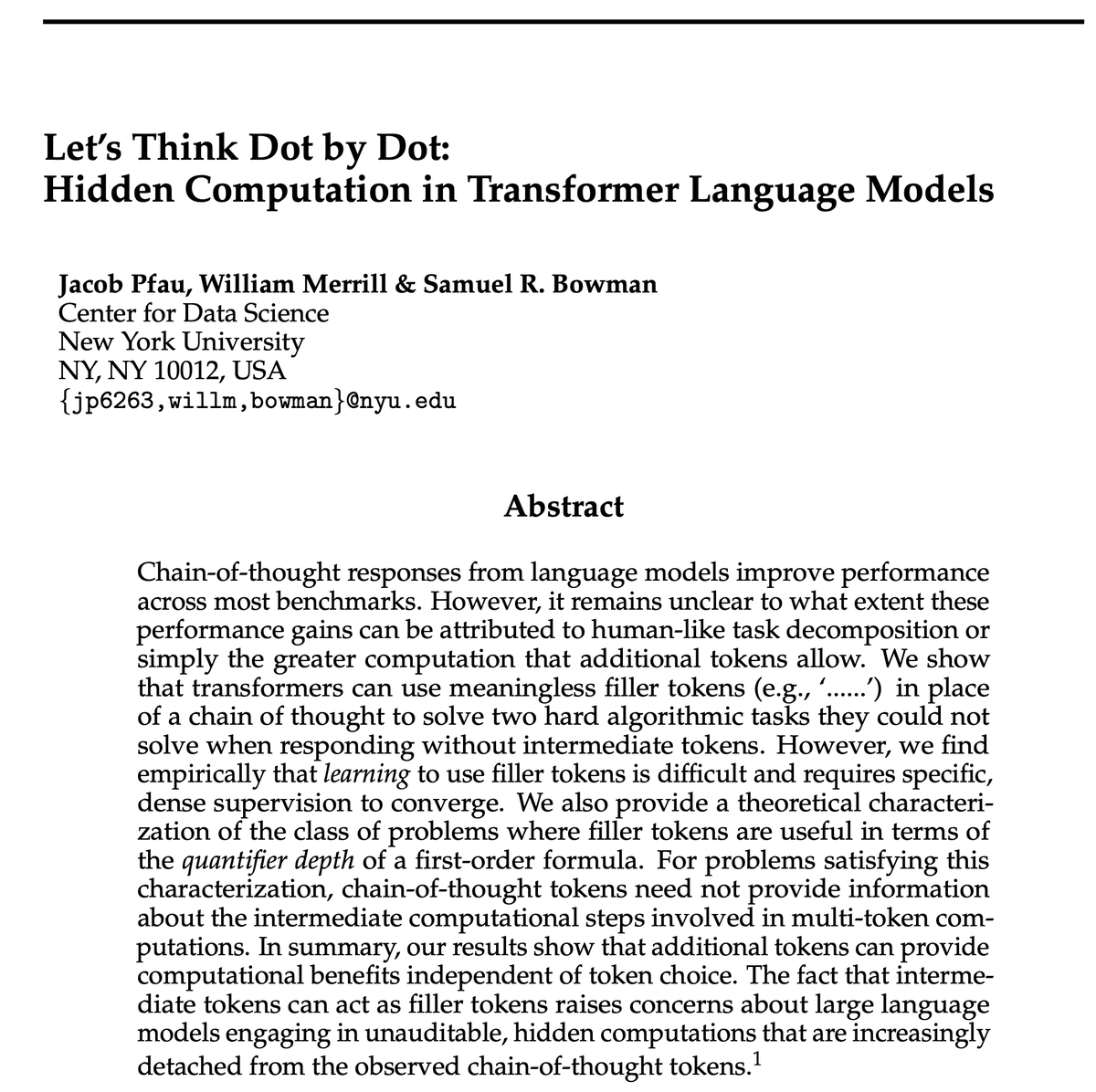

Is GPQA garbage?

A couple weeks ago, typedfemale pointed out some mistakes in a GPQA question, so I figured this would be a good opportunity to discuss how we interpret benchmark scores, and what our goals should be when creating benchmarks.

🚨📄 Following up on 'LMs Don't Always Say What They Think', Miles Turpin et al. now have an intervention that dramatically reduces the problem! 📄🚨

It's not a perfect solution, but it's a simple method with few assumptions and it generalizes *much* better than I'd expected.