Nikolas Adaloglou (black0017)

@nadaloglou

Making AI intuitive at https://t.co/1bgNHk9oRB || Human-centered PhD AI researcher || Book: https://t.co/WYKwPuBepT || AI Course: https://t.co/TH3jpaNqJb

ID:1154385257581436928

https://github.com/black0017 25-07-2019 13:37:47

1.3K Tweets

1.0K Followers

418 Following

[AI Summer Learning Mondays] - Transformers in computer vision: ViT architectures, tips, tricks and improvements | AI Summer hubs.ly/Q026ln_50 #MachineLearning #DeepLearning #ai #ai summer #machinelearning #artificialintelligence #python

Deep learning on computational biology and bioinformatics tutorial: from DNA to protein folding and alphafold2 | AI Summer hubs.ly/Q026lq9z0 #MachineLearning #DeepLearning #ai #ai summer #machinelearning #artificialintelligence #python

Learn how to perform 3D Medical image segmentation with transformers by Nikolas Adaloglou (black0017) hubs.ly/Q026lpNL0 #MachineLearning #DeepLearning #ai #ai summer #machinelearning #artificialintelligence #python

[AI Summer Learning Mondays] - Grokking self-supervised (representation) learning: how it works in computer vision and why | AI Summer hubs.ly/Q026lp3T0 #MachineLearning #DeepLearning #ai #ai summer #machinelearning #artificialintelligence #python

Introduction to medical image processing with Python: CT lung and vessel segmentation without labels by Nikolas Adaloglou (black0017) hubs.ly/Q026lp6G0 #MachineLearning #DeepLearning #ai #ai summer #machinelearning #artificialintelligence #python

Deep Learning in Production by Sergios Karagiannakos is on sale on Leanpub! Its suggested price is $25.00; get it for $10.50 with this coupon: leanpub.com/sh/4e2jkFOD Sergios Karagiannakos #MachineLearning #GoogleCloudPlatform #Ai #SoftwareArchitecture #Python #Docker

Understanding Vision Transformers (ViTs): Hidden properties, insights, and robustness of their representations theaisummer.com/vit-properties/

#AI #DeepLearning #MachineLearning #DataScience

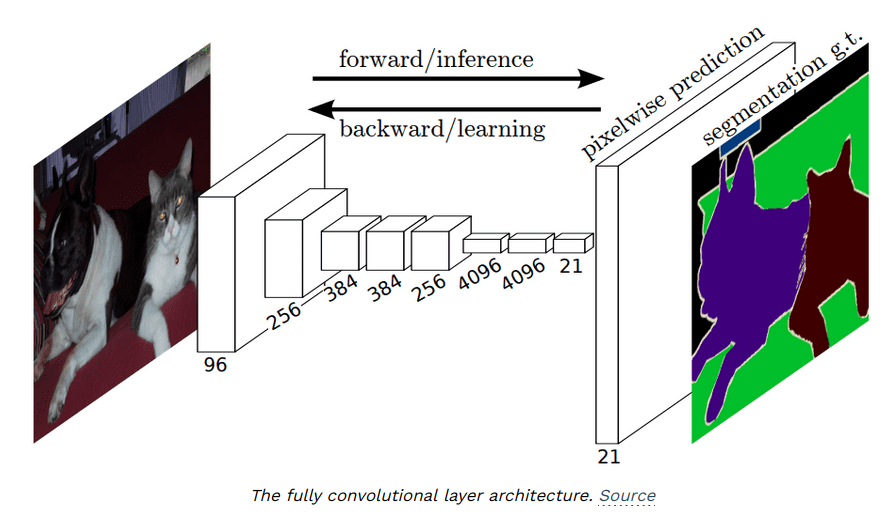

An overview of Unet architectures for semantic segmentation and biomedical image segmentation by Nikolas Adaloglou (black0017) hubs.ly/Q026lpkw0 #MachineLearning #DeepLearning #ai #ai summer #machinelearning #artificialintelligence #python

[AI Summer Learning Mondays] - Learn PyTorch Today: Training your first deep learning models step by step | AI Summer hubs.ly/Q026lpDf0 #MachineLearning #DeepLearning #ai #ai summer #machinelearning #artificialintelligence #python

[AI Summer Learning Mondays] - Kullback-Leibler Divergence Explained hubs.ly/Q026lpCn0 #MachineLearning #DeepLearning #ai #ai summer #machinelearning #artificialintelligence #python

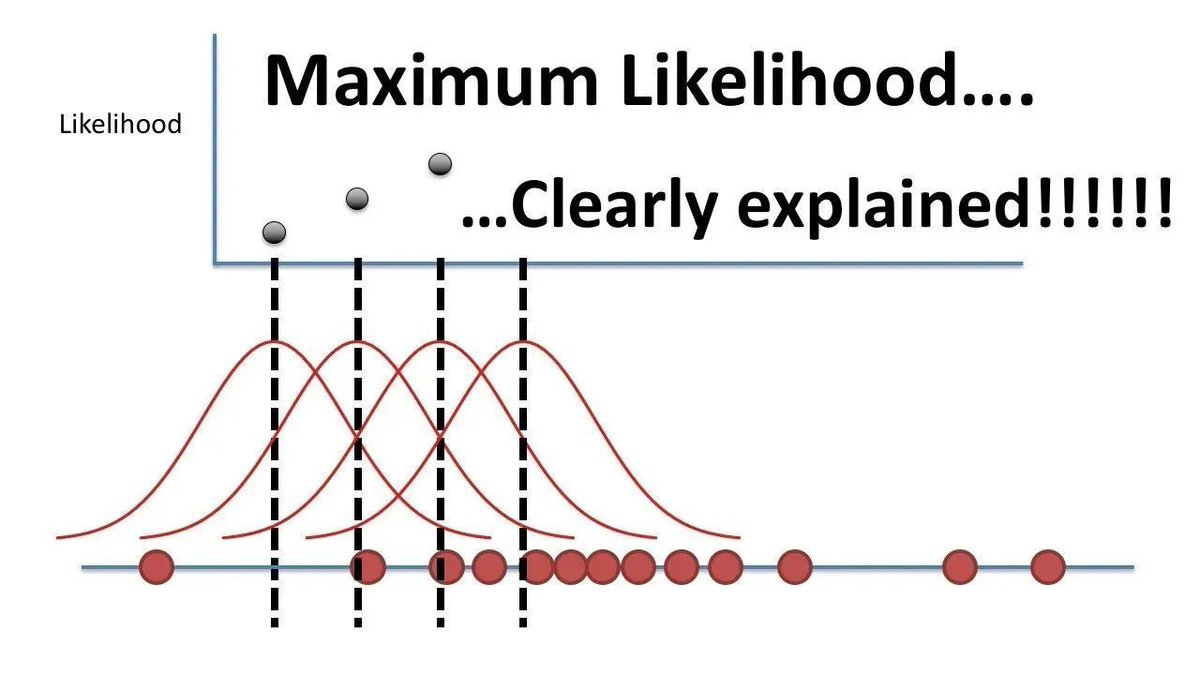

Understanding Maximum Likelihood Estimation in Supervised Learning

buff.ly/42xN4Fw v/ AI Summer

#AI #MachineLearning #DataScience

Cc Carla aka Data Nerd (#PEACE not war) 🎶 Kirk Borne Eric Gaubert Yann Marchand Nicolas Babin Fabrizio Bustamante