Pratyush Maini

@pratyushmaini

Trustworthy ML | PhD student @mldcmu | Founding Member @datologyai | Prev. Comp Sc @iitdelhi

ID:1191440736517939200

http://pratyushmaini.github.io 04-11-2019 19:43:22

296 Tweets

1.1K Followers

346 Following

It's Monday, my dudes, which means we're going to highlight some great data research that enables everyone to be a datologist.

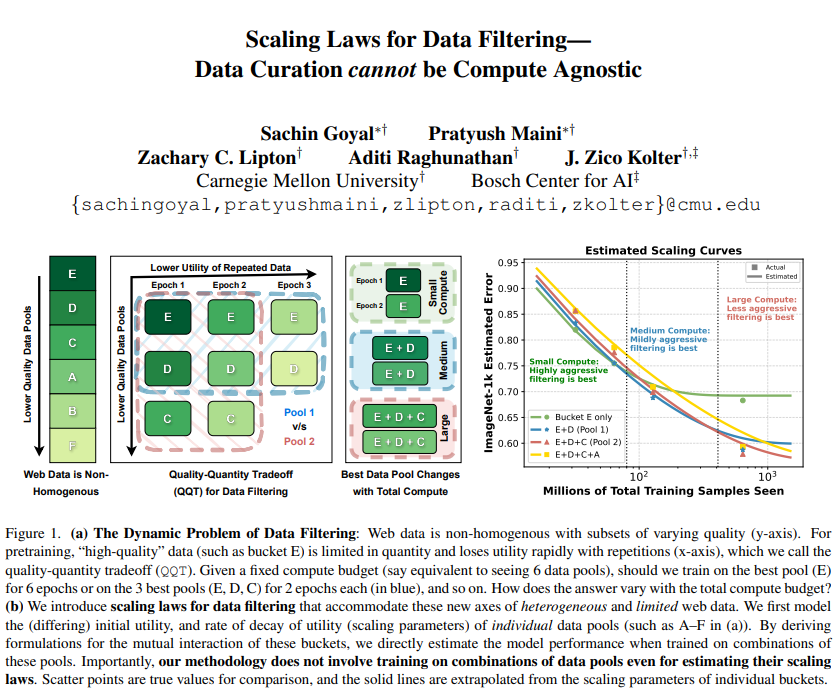

Today we're highlighting amazing work from our very own Pratyush Maini: Scaling Laws for Data Filtering.

tl;dr: in a finite data regime (i.e. compute…

💎💎 dropped by Sachin Goyal on the challenges of developing new scaling laws in academia, and that too with just ~10k GPU hours. Every training decision involved sooo much discussion because we had to choose our runs very frugally & wisely :)

How do you balance repeated training on high-quality data versus adding more low-quality data to the mix? And how much do you train on each type? Pratyush Maini and Sachin Goyal provide scaling laws for such settings. Really excited about the work!

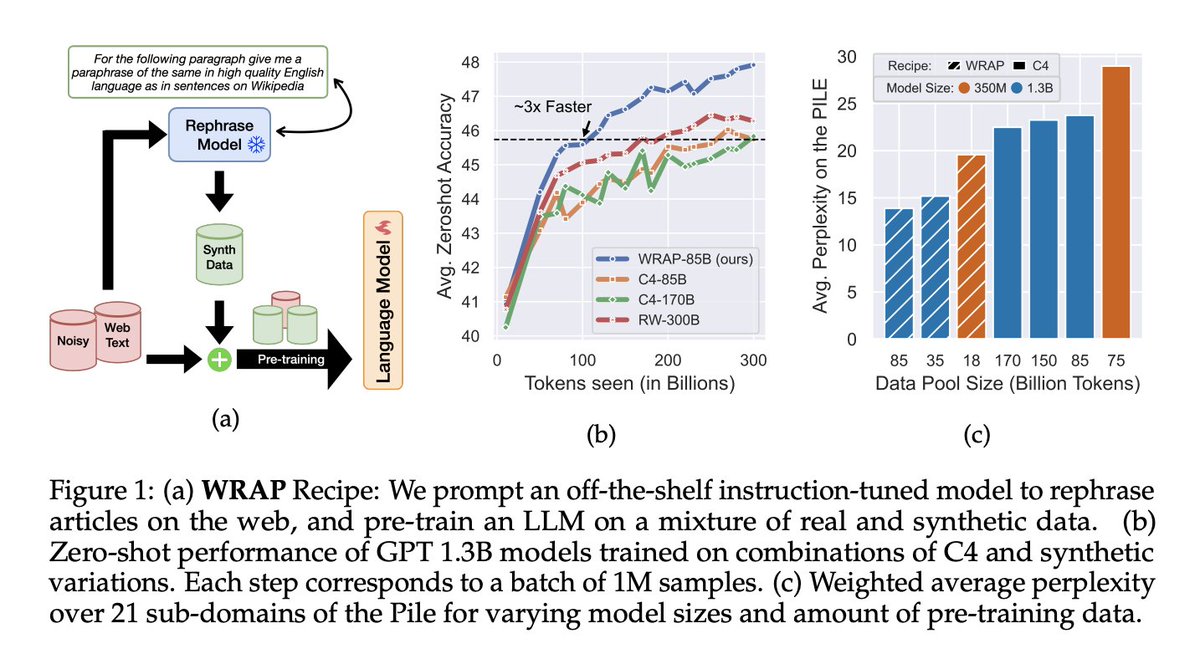

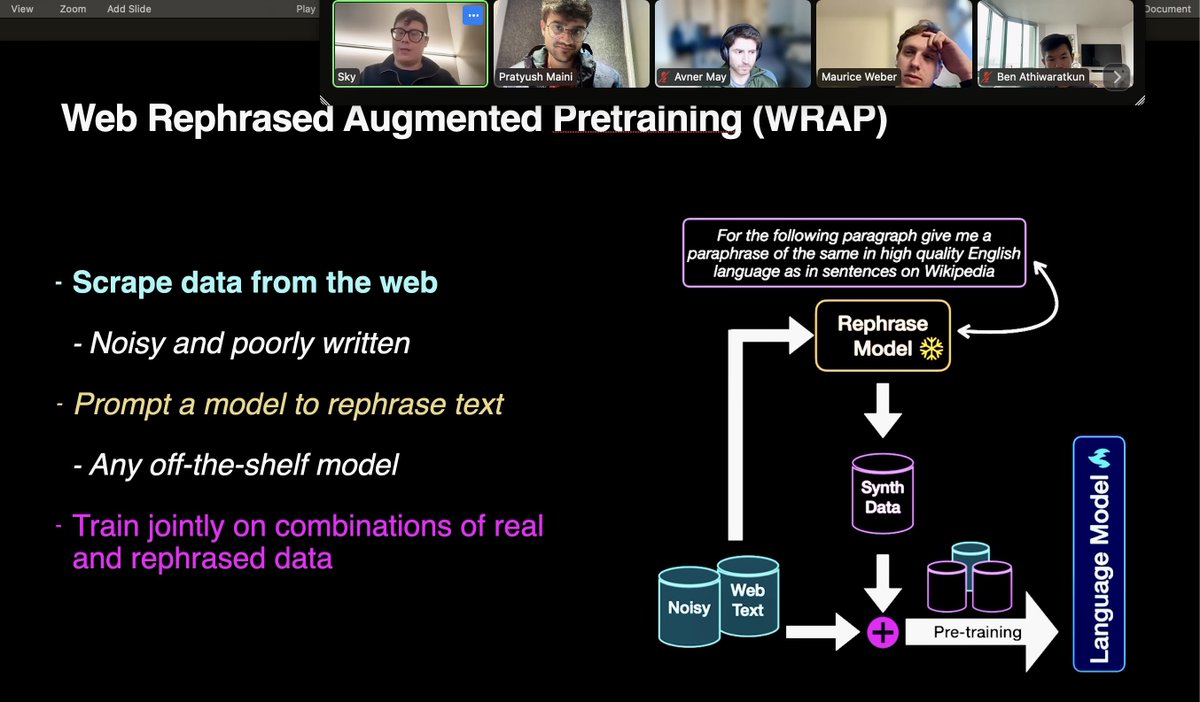

Enjoyed giving a talk on Rephrasing the Web at SambaNova Systems & Together AI over the past few days. Excited to see interest in synth data for pre-training LLMs.

Lots of interesting Qs concerning bias & factuality of synth data. Very important problems for future research!

We recently hosted Pratyush Maini at SambaNova Systems to talk about their work “Rephrasing the Web: A Recipe for Compute and Data-Efficient Language Modeling”

TLDR: By just rephrasing your existing datasets, you can achieve the same pre-training accuracy 3x faster with far lesser…