Robin Jia

@robinomial

Assistant Professor @CSatUSC | Previously Visiting Researcher @facebookai | Stanford CS PhD @StanfordNLP

ID:1012392833834029056

https://robinjia.github.io/ 28-06-2018 17:50:35

172 Tweets

3.2K Followers

759 Following

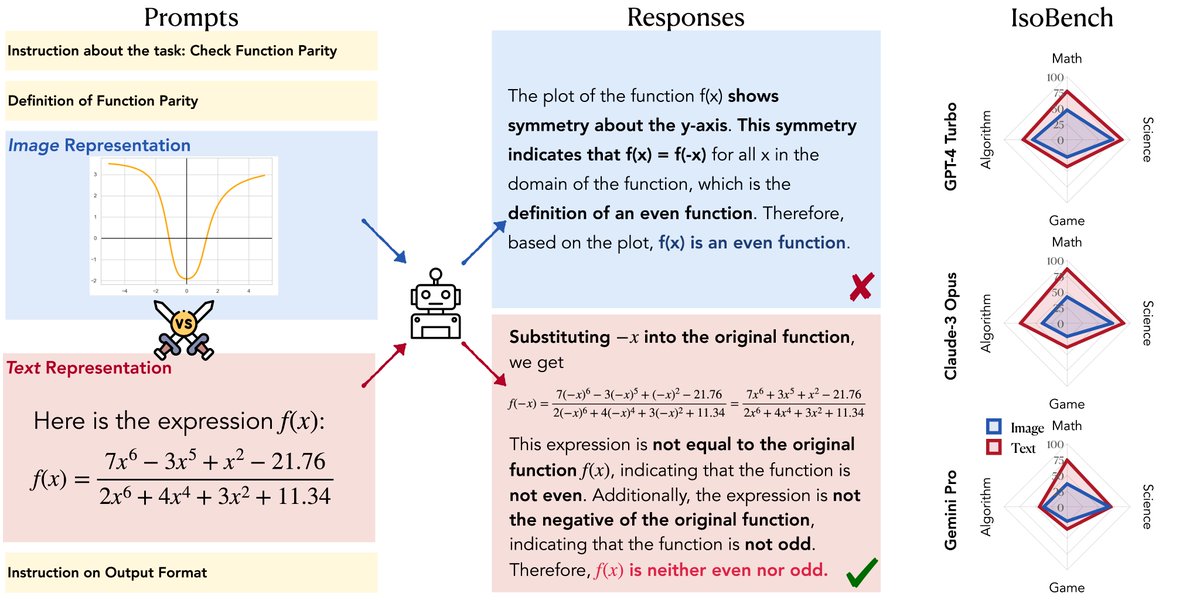

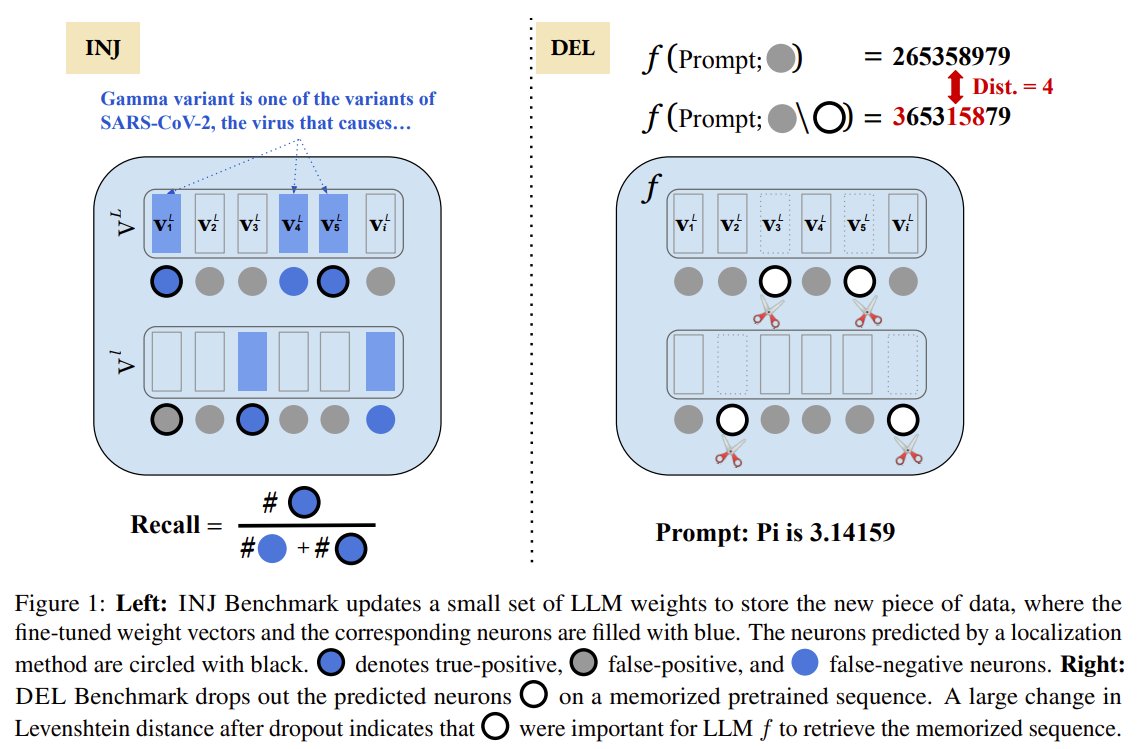

Localization in LLMs is often mentioned. But do localization methods actually localize correctly? In our #NAACL2024 paper, we (w/ Jesse Thomason, Robin Jia) develop two benchmarking approaches to directly evaluate how well 5 existing methods can localize memorized data in LLMs.

Join us for the USC Symposium on Frontiers of Generative AI Models in Science and Society!

Featuring special guests Alessandro Vespignani, Nitesh Chawla, Yizhou Sun, Jian Ma & Robin Jia, Yue Wang, Ruishan Liu, USC Viterbi School

📅 Mar 25

📍MCB 101

🔗RSVP (limited space) tinyurl.com/4zpmfysa

Determining whether an LLM has trained on your data isn’t a classification problem, it’s a statistical testing problem. Really proud of this work by Johnny Tian-Zheng Wei and Ryan Yixiang Wang on using random watermarks to rigorously detect data usage!

I’m at #NeurIPS23 and on the job market🎷🧳!! Come and talk about anything LLM reasoning, evaluating communicating agents, human-AI collaboration for new discoveries, coffee and jazz in NOLA☕️

hey EMNLP 2024 #EMNLP2023 i lost my bucket hat 😢 it’s white w yellow bananas 🍌 on it like in my profile, pls DM me if found 🙏🏻

We're hiring! USC Thomas Lord Department of Computer Science is growing fast, with multiple openings for tenure-track and tenured positions.

🔍Security/privacy, AI, machine learning, data science, HCI, but exceptional candidates in all areas considered.

📅Deadline: Jan 5

🔗Details: tinyurl.com/5e99bmb8

We are at #EMNLP2023 this week! 👋

Explore the latest research from the USC Thomas Lord Department of Computer Science and USC ISI, spanning social bias in name translation, AI tools for journalists, ambiguity in LMs, and much more ⬇️

viterbischool.usc.edu/news/2023/12/u…

USC Viterbi School EMNLP 2024 USC Research #NLP

It's a great honor to receive this award! Had so much fun gathering together at SoCalNLP today! #SoCalNLP2023 🎉

ICL reduces the need for labeled *training* data, but what about test data? Fantastic work led by USC undergrad Harvey Yiyun Fu (applying to PhD programs this year!) shows we can estimate ICL accuracy well (on par with 40 labeled examples) using *unlabeled* data + model uncertainties