Ryan Steed

@ryanbsteed

PhD student @HeinzCollege @CarnegieMellon | privacy, fairness, & algorithmic systems • @[email protected]

ID:1191093583018889218

https://rbsteed.com 03-11-2019 20:43:51

160 Tweets

394 Followers

422 Following

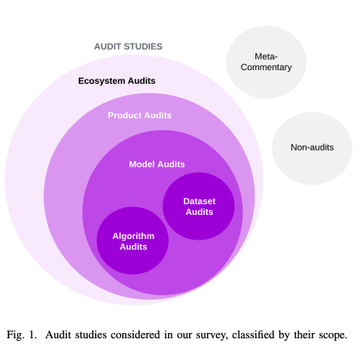

zhifeng kong kamalikac The other #SaTML2024 Distinguished Paper Award goes to SoK: AI auditing: The Broken Bus on the Road to AI Accountability by Abeba Birhane, Ryan Steed, Ojewale Victor, Briana Vecchione, and Deb Raji. arxiv.org/abs/2401.14462 2/4

🧵 Delving into this substantial paper mapping the AI audit tools ecosystem, from Ojewale Victor, Deb Raji, Abeba Birhane, Ryan Steed & Briana Vecchione.

I'm thrilled to see World Privacy Forum's complementary report on AI Governance Tools around the world & emerging problems is cited throughout.🙏 😊

National Institute of Standards and Technology Big Brother Watch In any case, all this debate on accuracy scores is a DISTRACTION when the technology threatens fundamental issues such as rights to assembly.

Deployment of FRT for policing will alter Irish society for the worse, irreversibly, accurate or not.

18/

Ireland is in the midst of a heated debate on whether to legislate for police use of FRT. The Gardaí (Irish police) are adamant they need FRT at any cost

They are using this National Institute of Standards and Technology report (pages.nist.gov/frvt/html/frvt…) to claim 99% accuracy, which is deceptive & misleading

1/

New preprint dropped! arxiv.org/abs/2401.15897

In it, Zachary Lipton, Hoda Heidari, Anusha Sinha, and I scrutinize and critique generative AI red-teaming practices as found in-the-wild. 🧵(1/n)

Part of the interesting work we have been doing on the Mozilla Open Source Audit Tooling (OAT) project to understand the current state of AI auditing vis-à-vis accountability.

Abeba Birhane Ryan Steed Briana Vecchione Deb Raji

New paper from Ryan Steed, Ojewale Victor, Briana Vecchione, Deb Raji & me.

'AI auditing: The Broken Bus on the Road to AI Accountability' arxiv.org/abs/2401.14462.

We review & taxonomize the current audit landscape & assess impact and effectiveness.

long 🧵

1/

.Abeba Birhane’s work is foundational, cite it!

It’s great that the cesspools that constitute training data are getting more attention (they are perpetually overlooked in “responsible” AI work), but it’s harmful and counterproductive to ignore unmissable studies like theirs