We introduce the first multimodal instruction tuning dataset: 🌟MultiInstruct🌟 in our 🚀 #ACL2023NLP 🚀 paper. MultiInstruct consists of 62 diverse multimodal tasks and each task is equipped with 5 expert-written instructions.

🚩arxiv.org/abs/2212.10773🧵[1/3]

![zhiyang xu (@zhiyangx11) on Twitter photo 2023-06-11 20:06:19](https://pbs.twimg.com/media/FyXZnKoaEAAmew1.jpg)

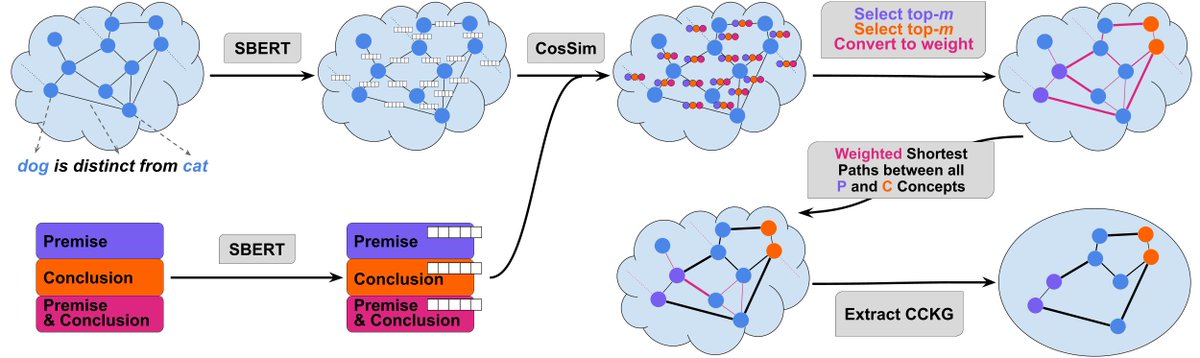

Happy to share my first paper “Similarity-weighted Construction of Contextualized Commonsense Knowledge Graphs for Knowledge-intense Argumentation Tasks”, accepted at #ACL2023NLP 🥳

📜 arxiv.org/abs/2305.08495

🎥 youtube.com/watch?v=aA5kPg…

1/n

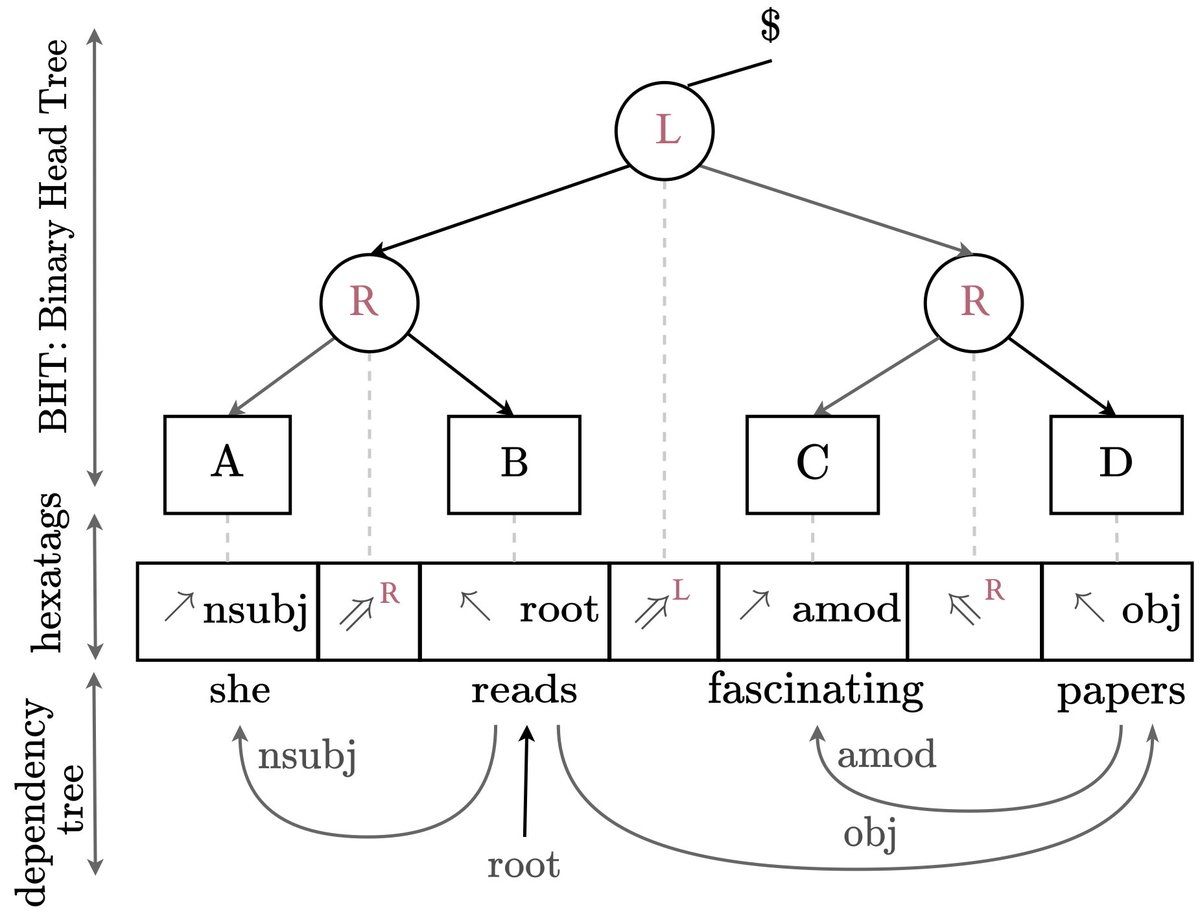

Are you a big fan of structure?

Have you ever wanted to apply the latest and greatest large language model out-of-the-box to parsing?

Are you a secret connoisseur of linear-time dynamic programs?

If you answered yes, our outstanding #ACL2023NLP paper may be just right for you!

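My first-author paper has been accepted to Findings of ACL 2023!

“Kanbun-LM: Reading and Translating Classical Chinese in Japanese Methods by Language Models”

The work is about automatically adding kaeriten (reading-order marks) to Kanbun and generating kakikudashi-bun (the text rewritten in Japanese reading order). It's a niche field, but we hope it can contribute to the development of Kanbun education!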

#ACL2023NLP

📢 New paper to appear at #acl2023nlp: arxiv.org/abs/2212.10534

Human-quality counterfactual data with no humans! Introducing DISCO, our novel distillation framework that automatically generates high-quality, diverse, and useful counterfactual data at scale using LLMs.

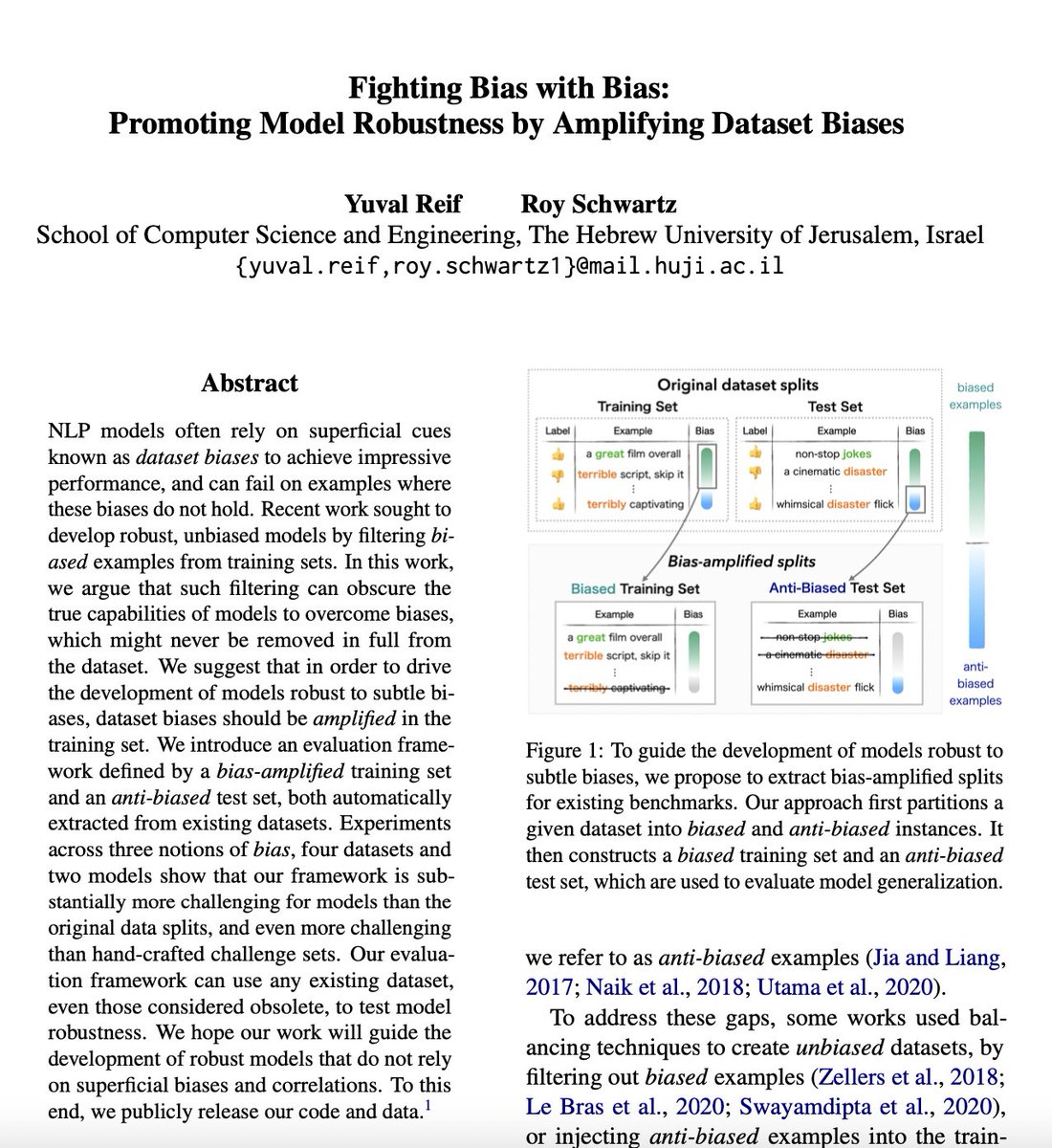

Is dataset debiasing the right path to robust models?

In our work, “Fighting Bias with Bias”, we argue that in order to promote model robustness, we should in fact amplify biases in training sets.

w/ Roy Schwartz

In #ACL2023NLP Findings

Paper: arxiv.org/abs/2305.18917

🧵👇

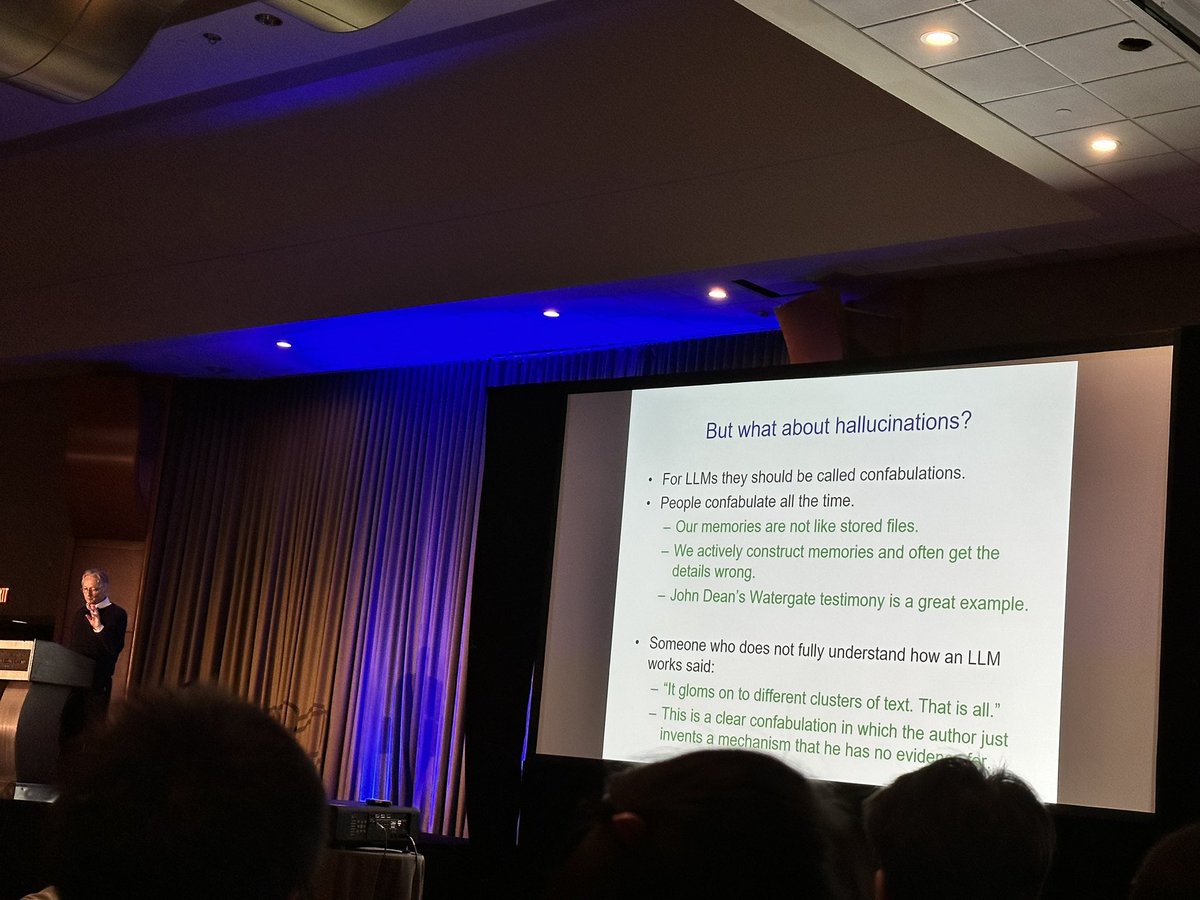

Geoffrey Hinton (#ACL2023NLP keynote address): LLMs’ hallucinations should be called confabulations

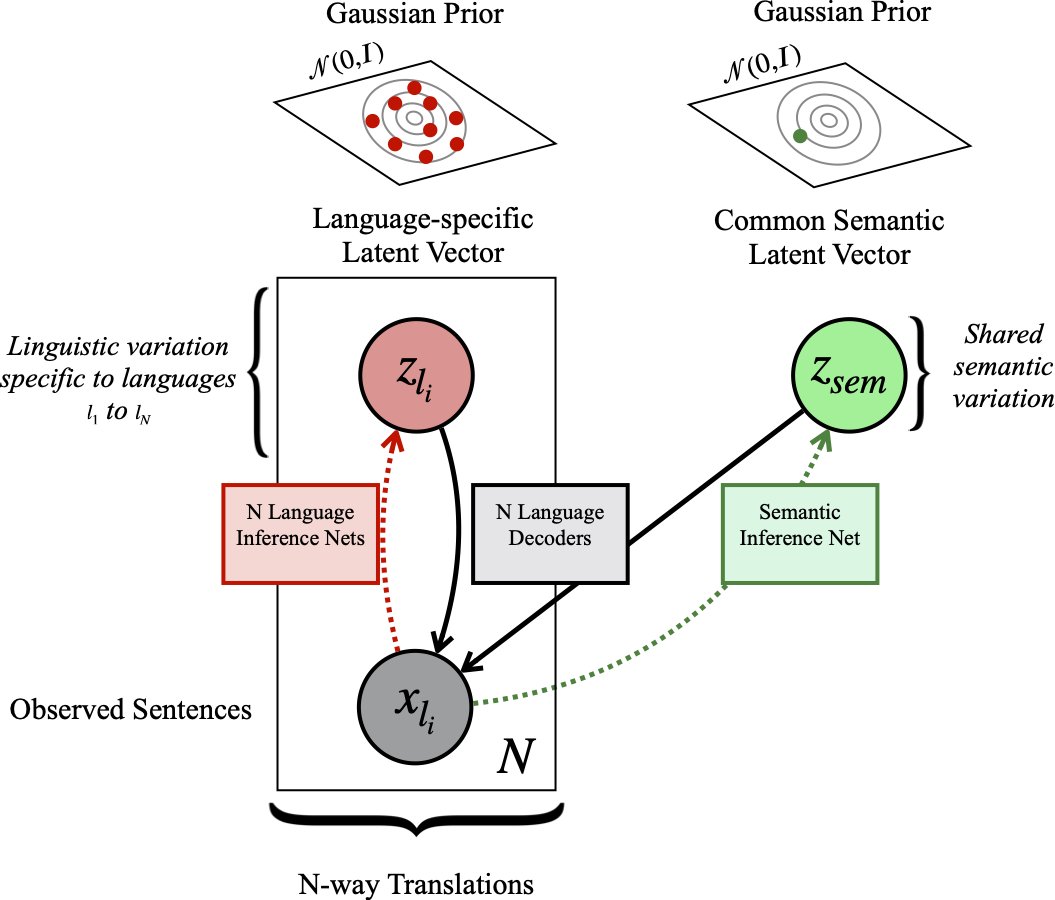

Want to try a new multilingual sentence retriever, free of the quirks and demands of contrastive learning? Read about VMSST, our new variational source-separation method. Improves quality and trains with small batches!

To appear at #ACL2023NLP.

Arxiv: arxiv.org/abs/2212.10726

I received an email earlier today saying that my visa application to attend #ACL2023NLP in Canada is approved.

The conference was held five months ago in July 2023! 😑

#NLProc #AcademicTwitter

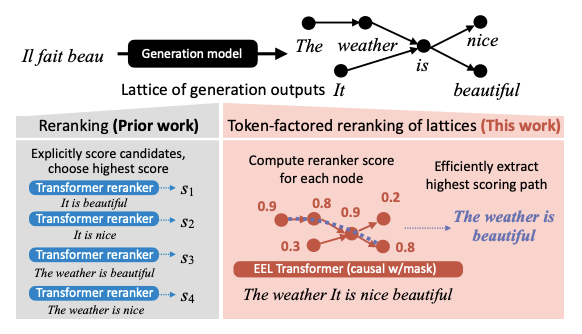

New #ACL2023NLP paper!

Reranking generation sets with transformer-based metrics can be slow. What if we could rerank everything at once? We propose EEL: Efficient Encoding of Lattices for fast reranking!

Paper: arxiv.org/abs/2306.00947 w/ Jiacheng Xu, Xi Ye, Greg Durrett

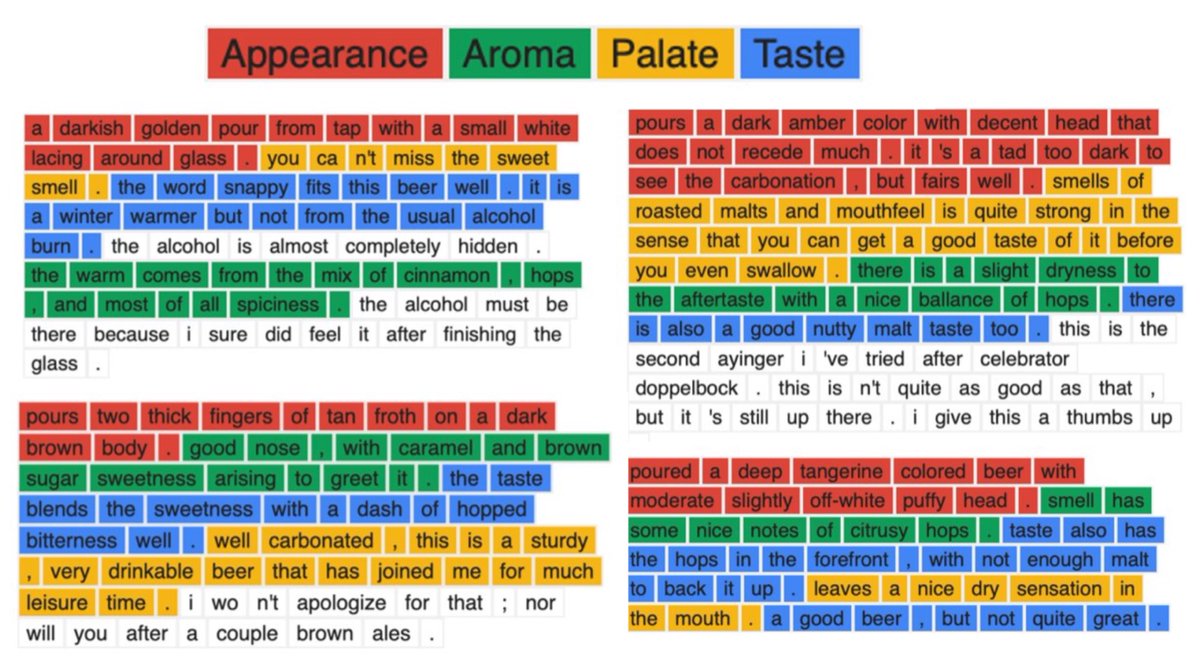

Chart captioning is hard, both for humans & AI.

Today, we’re introducing VisText: a benchmark dataset of 12k+ visually-diverse charts w/ rich captions for automatic captioning (w/ Angie Boggust, Arvind Satyanarayan)

📄: vis.csail.mit.edu/pubs/vistext.p…

💻: github.com/mitvis/vistext

#ACL2023NLP

Does an LLM forget when it learns a new language?

We systematically study catastrophic forgetting in a massively multilingual continual learning framework in 51 languages.

Preprint: arxiv.org/abs/2305.16252

⬇️🧵

The paper was accepted at #acl2023nlp findings #NLProc [1/4]

![Genta Winata (@gentaiscool) on Twitter photo 2023-05-26 00:39:04](https://pbs.twimg.com/media/FxA6SQeWwAAZ_R7.jpg)

Super excited to share our #ACL2023NLP paper! 🙌🏽

📢 Are Machine Rationales (Not) Useful to Humans? Measuring and Improving Human Utility of Free-Text Rationales

📑: arxiv.org/abs/2305.07095

🧵👇 [1/n]

#NLProc #XAI

![Brihi Joshi (@BrihiJ) on Twitter photo 2023-05-15 07:17:39](https://pbs.twimg.com/media/FwJr719XsAUMkC2.jpg)