#EMNLP2021 I am excited to introduce our EMNLP Findings paper 'Probing Across Time: What Does RoBERTa Know and When?'. We investigate the learning dynamics of RoBERTa with a diverse set of probes: linguistic, factual, commonsense, and reasoning.

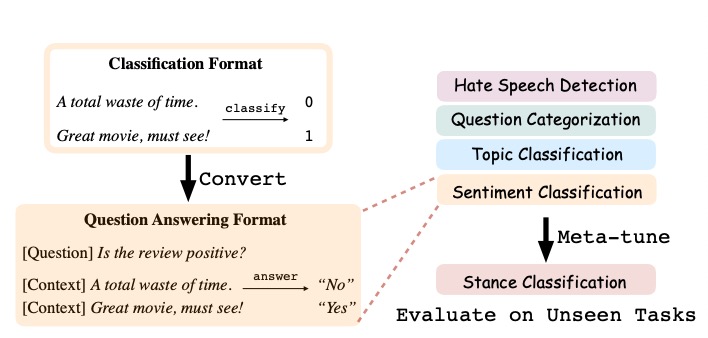

We can prompt language models for 0-shot learning ... but it's not what they are optimized for😢.

Our #emnlp2021 paper proposes a straightforward fix: 'Adapting LMs for 0-shot Learning by Meta-tuning on Dataset and Prompt Collections'.

Many interesting takeaways below 👇

Dense retrieval models (e.g. DPR) achieve SOTA on various datasets. Does this really mean dense models are better than sparse models (e.g. BM25)?

No! Our #EMNLP2021 paper shows dense retrievers even fail to answer simple entity-centric questions.

arxiv.org/abs/2109.08535 (1/6)

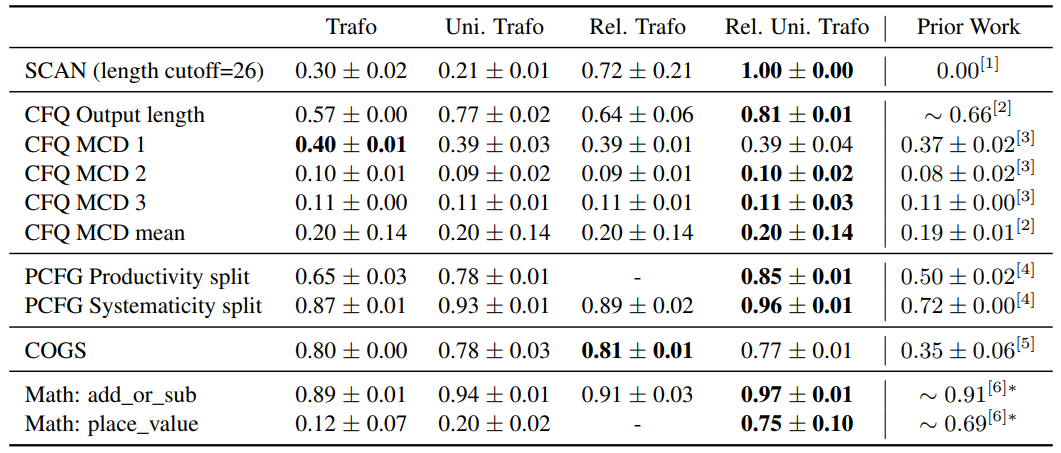

I'm happy to announce that our paper 'The Devil is in the Detail: Simple Tricks Improve Systematic Generalization of Transformers' has been accepted to #EMNLP2021 !

paper: arxiv.org/abs/2108.12284

code: github.com/robertcsordas/…

1/4

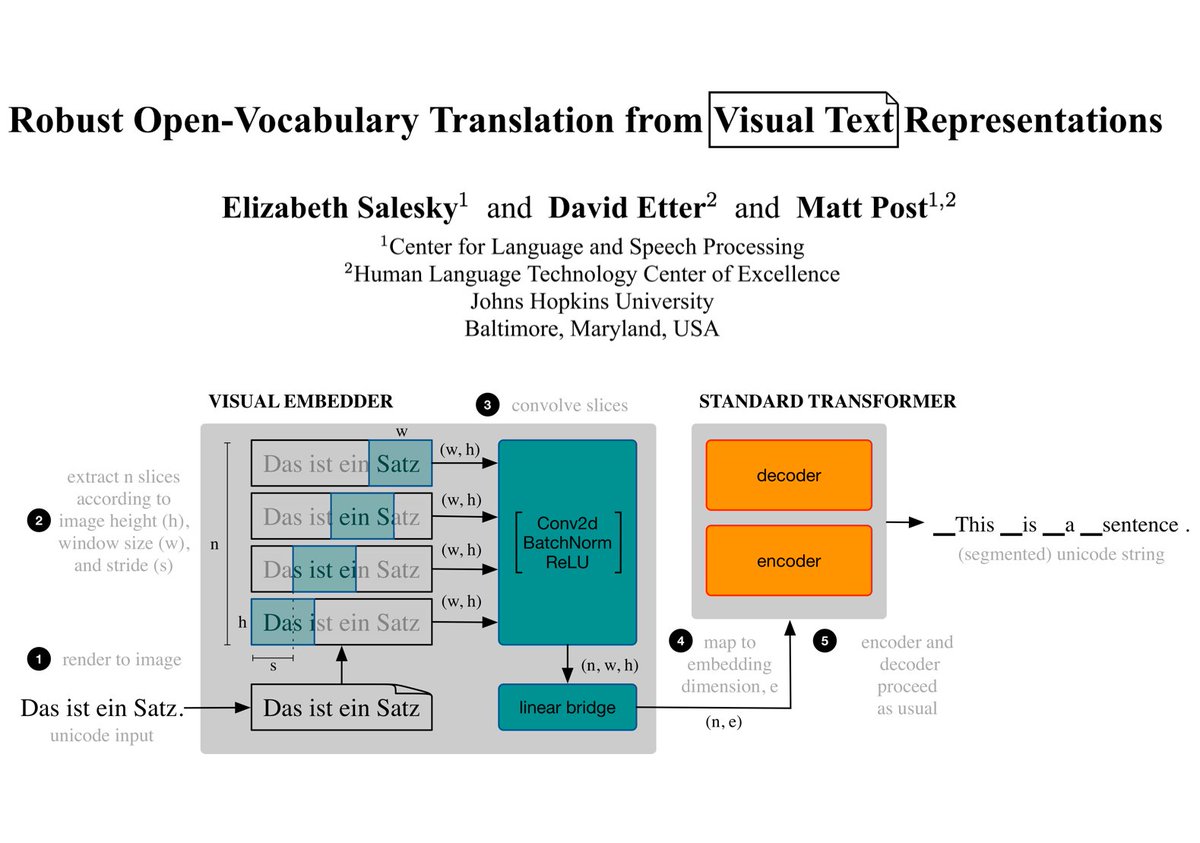

Our work on visual text representations will be presented at #EMNLP2021 !

Rather than unicode-based character or subword representations, we render text as images for translation, improving robustness (see 🧵).

Paper 📝: arxiv.org/abs/2104.08211

Code ⌨ : github.com/esalesky/visrep

Newish #EMNLP2021 work w/ Arturs Backurs & Karl Stratos: we try to generate text (in a data-to-text setting) by splicing together pieces of retrieved neighbor text.

Paper: arxiv.org/pdf/2101.08248…

1/3

Have you or a loved one used similarity measures like cosine similarity or L2 distance in transformer LMs?

Our #EMNLP2021 paper shows that a few 'rogue' dimensions consistently break sim. metrics in these models.

Luckily, there are some easy fixes (🧵)

arxiv.org/abs/2109.04404
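To make the 'rogue dimension' effect concrete, here is a minimal toy sketch in plain Python. It is an illustration only, not the paper's code: the data is synthetic, the helper names (`cosine`, `standardize`, `mean_pairwise_cosine`) are made up for this example, and per-dimension standardization is used as one plausible 'easy fix' since the tweet does not spell out which fixes are meant.

```python
import math
import random
import statistics

def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def standardize(vectors):
    """Z-score every dimension across the given set of vectors."""
    columns = list(zip(*vectors))
    means = [statistics.fmean(col) for col in columns]
    stds = [statistics.pstdev(col) for col in columns]
    return [
        [(x - m) / s if s > 0 else 0.0 for x, m, s in zip(vec, means, stds)]
        for vec in vectors
    ]

def mean_pairwise_cosine(vectors):
    """Average cosine similarity over all distinct pairs of vectors."""
    sims = [
        cosine(vectors[i], vectors[j])
        for i in range(len(vectors))
        for j in range(i + 1, len(vectors))
    ]
    return statistics.fmean(sims)

# Hypothetical toy data: 50 random 16-d vectors. Dimension 0 is shifted to a
# large mean so that it behaves like a single "rogue" dimension.
rng = random.Random(0)
toy = [[rng.gauss(0.0, 1.0) for _ in range(16)] for _ in range(50)]
for vec in toy:
    vec[0] += 100.0

raw_sim = mean_pairwise_cosine(toy)
fixed_sim = mean_pairwise_cosine(standardize(toy))
print(f"raw: {raw_sim:.3f}  standardized: {fixed_sim:.3f}")
```

In this toy setup the raw vectors all look nearly identical under cosine similarity because one dimension dominates the dot product, while the standardized vectors look roughly unrelated, which is what the other 15 random dimensions would suggest.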

Our #emnlp2021 paper analyzed masked LMs🔬considering residual connection and layer normalization in addition to attention.

Analysis revealed

- Attention has less impact than previously assumed

- BERT’s working relates to word frequency

- and so on!

📄 arxiv.org/abs/2109.07152

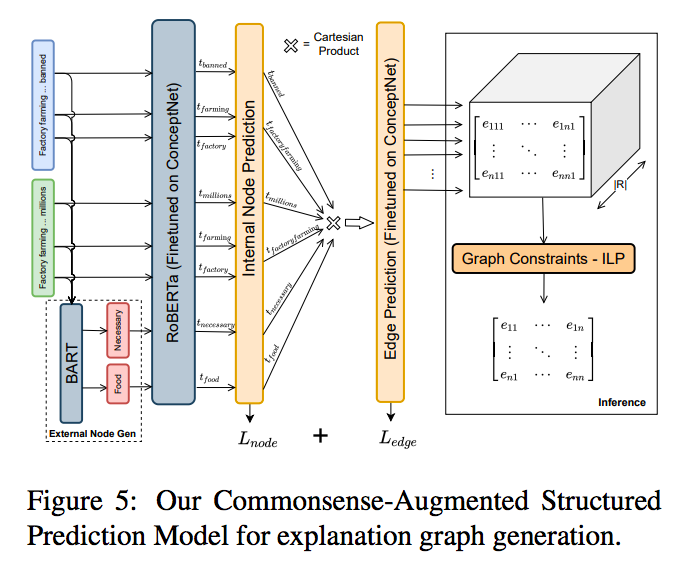

ExplaGraphs (to be presented at #EMNLP2021 ): Check out our website & new version with more+refined graph data, new structured models, new metrics (like graph-editdistance + graph-bertscore) & human eval + human-metric correlation😀

explagraphs.github.io

arxiv.org/abs/2104.07644

📢📜 #NLPaperAlert 🌟Knowledge Conflicts in QA🌟- what happens when facts learned in training contradict facts given at inference time? 🤔

How can we mitigate hallucination + improve OOD generalization? 📈

Find out in our #EMNLP2021 paper! [1/n]

arxiv.org/abs/2109.05052

![Shayne Longpre (@ShayneRedford) on Twitter photo 2021-09-22 15:59:44](https://pbs.twimg.com/media/E_5rq8ZVQAQqGwR.png)

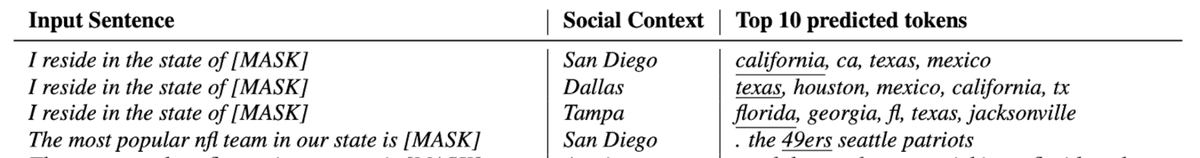

How can you incorporate social factors (e.g., time, geography) that influence language use and understanding into large-scale LMs? With Shubhanshu Mishra and Aria Haghighi, we propose a simple pre-training method for this. arxiv.org/abs/2110.10319 (Findings of EMNLP 2021) #emnlp2021

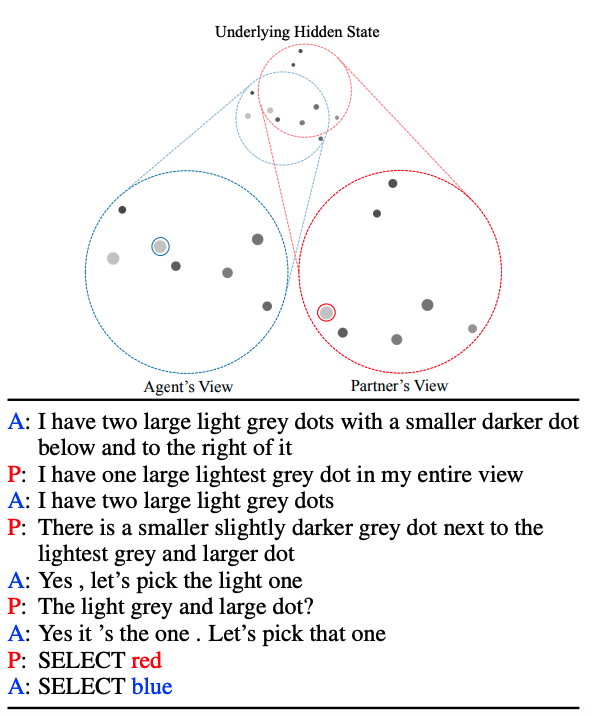

We built a pragmatic, grounded dialogue system that improves pretty substantially in interactions with people in a challenging grounded coordination game. Real system example below! Work with Justin Chiu and Dan Klein, upcoming at #EMNLP2021 .

Paper: arxiv.org/abs/2109.05042

📢 Interested in speech, multilinguality, and NN spaces?

Our paper 'How Familiar Does That Sound? Cross-Lingual Representational Similarity Analysis of Acoustic Word Embeddings' is coming out in #BlackboxNLP #EMNLP2021

📝arxiv.org/pdf/2109.10179…

🐍github.com/uds-lsv/xRSA-A…

1/🧵

A surprisal–duration trade-off across and within the world’s languages!

Analysing 600 languages, we find evidence of this trade-off both cross-linguistically and within 319 of them.

We conclude less surprising phones are produced faster.

#EMNLP2021

arxiv.org/abs/2109.15000

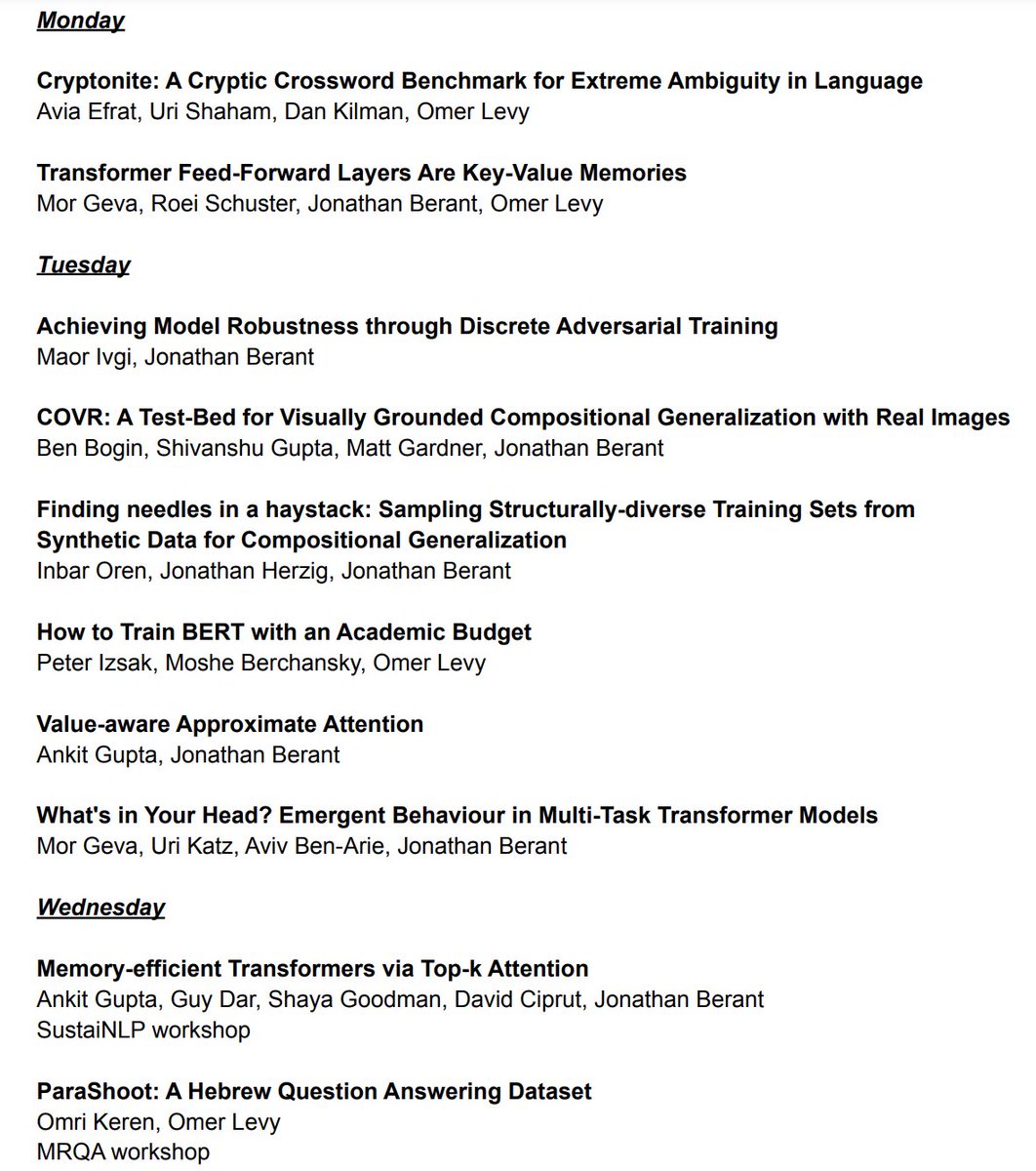

Challenging benchmarks, transformers and their analysis, compositional generalization, robustness, and a lot more cool work from TAU-NLP presented at #emnlp2021 this week, check it out (click the image to see all papers...)! We have a strong in-person presence so come say hi...

Politics can skew the news and shape our understanding of big issues. But how do some details change how we feel about key actors? Our #EMNLP2021 Findings (w/ @Diyi_Yang) answers this with computational analysis of 82k articles on police violence arxiv.org/pdf/2109.05325…

[1/9]

![Caleb Ziems (@cjziems) on Twitter photo 2021-09-14 15:15:51](https://pbs.twimg.com/media/E_QU5nuWQAgRZWQ.jpg)