Does your LLM know what a pizza looks like? If not, you need a Vision Language Model (VLM). Here at Hugging Face, we have just added VLM finetuning support to TRL's SFTTrainer.

Doing a live Q&A in 1 hr (10 AM New York time)! I'll do a live demo of 2x faster finetuning with Unsloth AI, showcase some community projects, then open it up for a full Q&A!

Ask any question you like, about Unsloth or AI in general, maths, etc.! I'll try to record it, but I'm not sure I can :( Zoom link: us06web.zoom.us/webinar/regist…

Finetuning ControlNet with my favorite Pictomancer 🎨🖌️

#aivideo #AIArt #AIArtwork #aianimation #stablevideodiffusion #AnimateDiff #SvD #aiartcommunity #aigirls #AI美女 #AIイラスト #stablediffusion

LinkedIn / Twitter post:

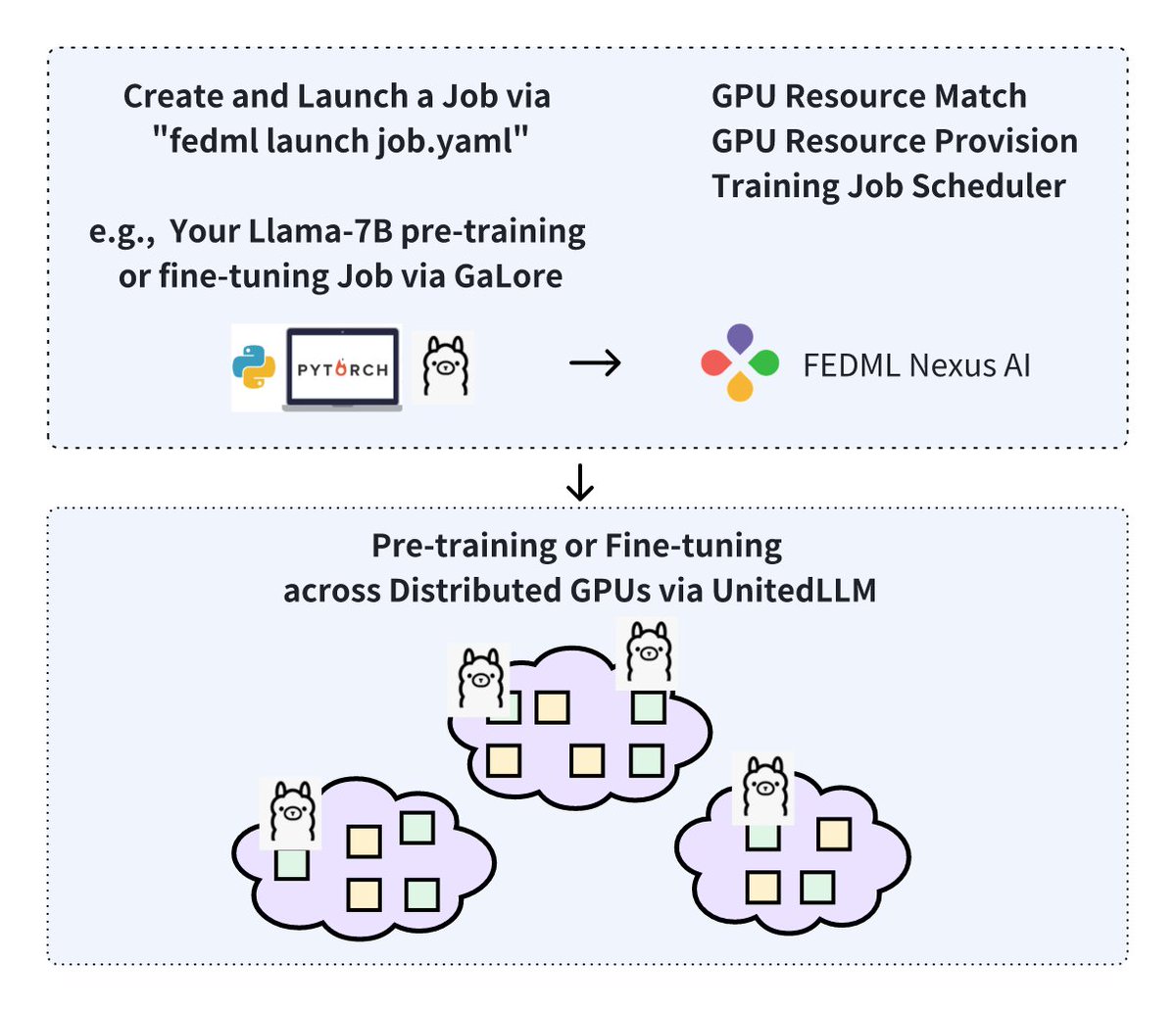

🚀 Exciting News! 🚀 #pretraining #finetuning #llm #GaLore #FEDML

🌟 The FEDML Nexus AI platform now supports pre-training and fine-tuning LLaMA-7B on geo-distributed RTX 4090s!

📈 By supporting the newly developed GaLore as a ready-to-launch job in FEDML…

Junyuan Hong (@hjy836) on Twitter, 2024-04-09 03:25:23:

Finetuning can amplify the privacy risks of Generative AI (Diffusion Models)

Last week, I was honored to give a talk at the Good Systems Symposium (gssymposium2024.splashthat.com), where I shared our recent work on the 🚨 privacy risks of Generative AI via finetuning. Our leading…

![Finetuning can amplify the privacy risks of Generative AI (Diffusion Models)](https://pbs.twimg.com/media/GKsUdhmXQAAYSMJ.png)