UCSB NLP Group

@ucsbNLP

The NLP Group @ University of California, Santa Barbara. Profs. @WilliamWangNLP, Xifeng Yan, Simon Todd, @CodeTerminator, @lileics; acct run by @m2saxon

ID:1417329311506264066

http://nlp.cs.ucsb.edu/

20-07-2021 03:44:56

179 Tweets

1.4K Followers

735 Following

With all of the excitement of the past few months, it's time for a career update: 🎉 I graduated with my PhD from the UCSB NLP Group at UC Santa Barbara 🥳 and joined SynthLabs 🎊 to drive open-science collaborations and push the boundaries of data strategies for synthetic data.

👇 I'm at #ICLR!

When LLMs make mistakes, can we build a model to pinpoint the error and indicate its severity and type? Can we incorporate this fine-grained information to improve LLMs? We introduce LLMRefine [NAACL 2024], a simulated annealing method that revises LLM output at inference time. @GoogleAI @ucsbNLP

![Wenda Xu (@WendaXu2) on Twitter, photo, 2024-04-02 18:39:14](https://pbs.twimg.com/media/GKLjXF9XQAEizzm.jpg)

Huge congratulations to Alon Albalak for defending his PhD thesis “Understanding and Improving Models Through a Data-Centric Lens”. It’s refreshing to witness Alon’s growth, innovation, and leadership in the last few years. Alon is my 8th PhD graduate and I wish him all the best!

🤩 I'm honored that insights from our data selection survey are being shared across the globe 🌍

Fantastic slides, Thomas Wolf!

Awesome new work with Sharon Levy and colleagues. I learned a lot about how to think about bias in LLMs from this study.

[New paper!] Can LLMs truly evaluate their own output? Can self-refine/self-reward improve LLMs? Our study reveals that LLMs exhibit a bias toward their own output. This self-bias is amplified during self-refine/self-reward, which hurts performance. @ucsbNLP

![Wenda Xu (@WendaXu2) on Twitter, photo, 2024-02-22 18:22:12](https://pbs.twimg.com/media/GG9X2luWEAIpNLc.jpg)

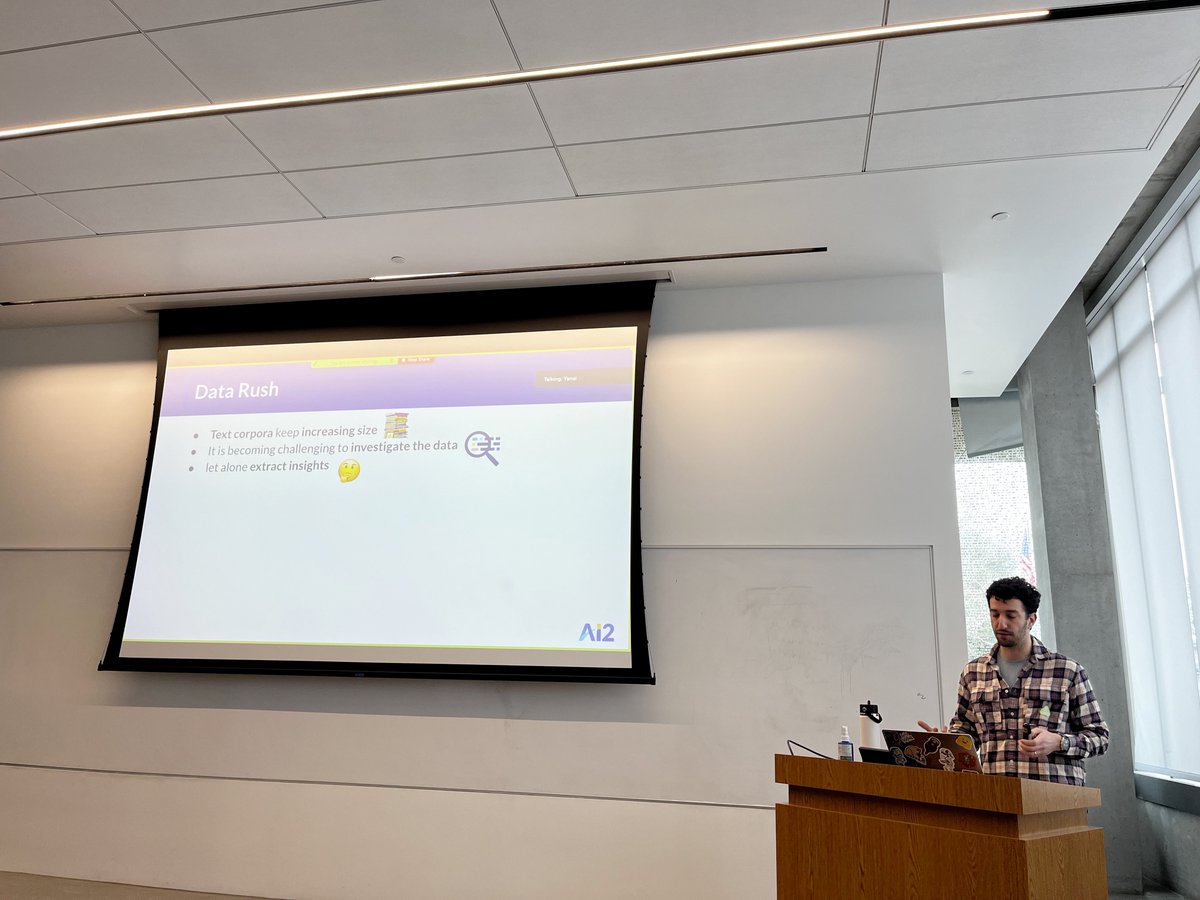

Thank you for joining us yesterday, Yanai Elazar @ICLR! We are very excited by the 'What's In My Big Data' direction! wimbd.apps.allenai.org