Oreva Ahia

@orevaahia

PhD student @uwcse | ex: AI/ML Research Intern @apple | Co-organizer @AISaturdayLagos | Researcher @MasakhaneNLP

ID:836314434

https://orevaahia.github.io/ 20-09-2012 20:39:09

1.7K Tweets

1.4K Followers

971 Following

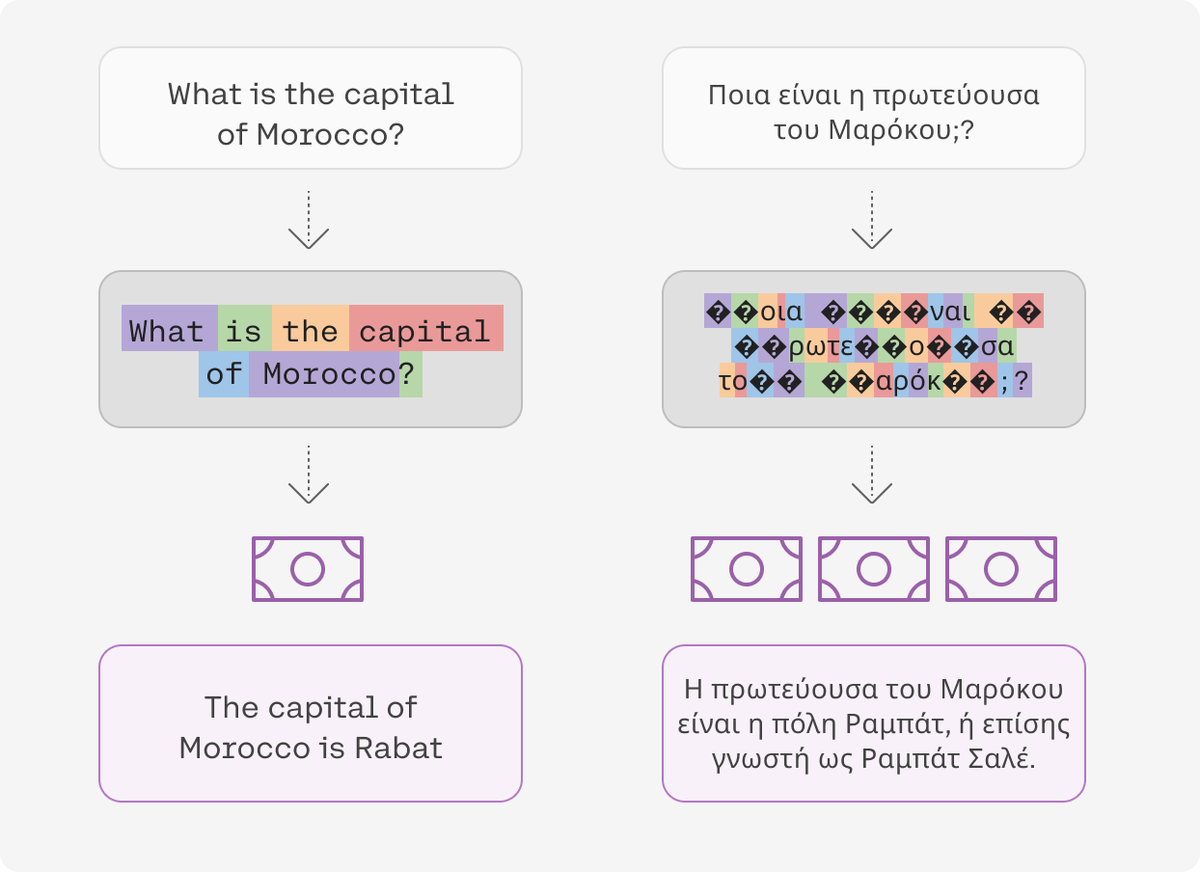

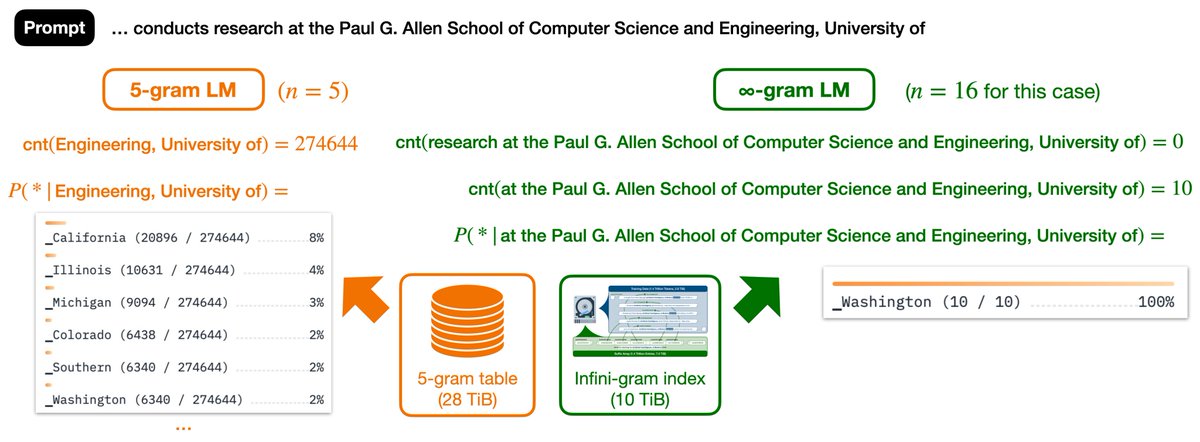

Shoutout to Oreva Ahia et al., who wrote a great paper that revealed this issue! arxiv.org/abs/2305.13707

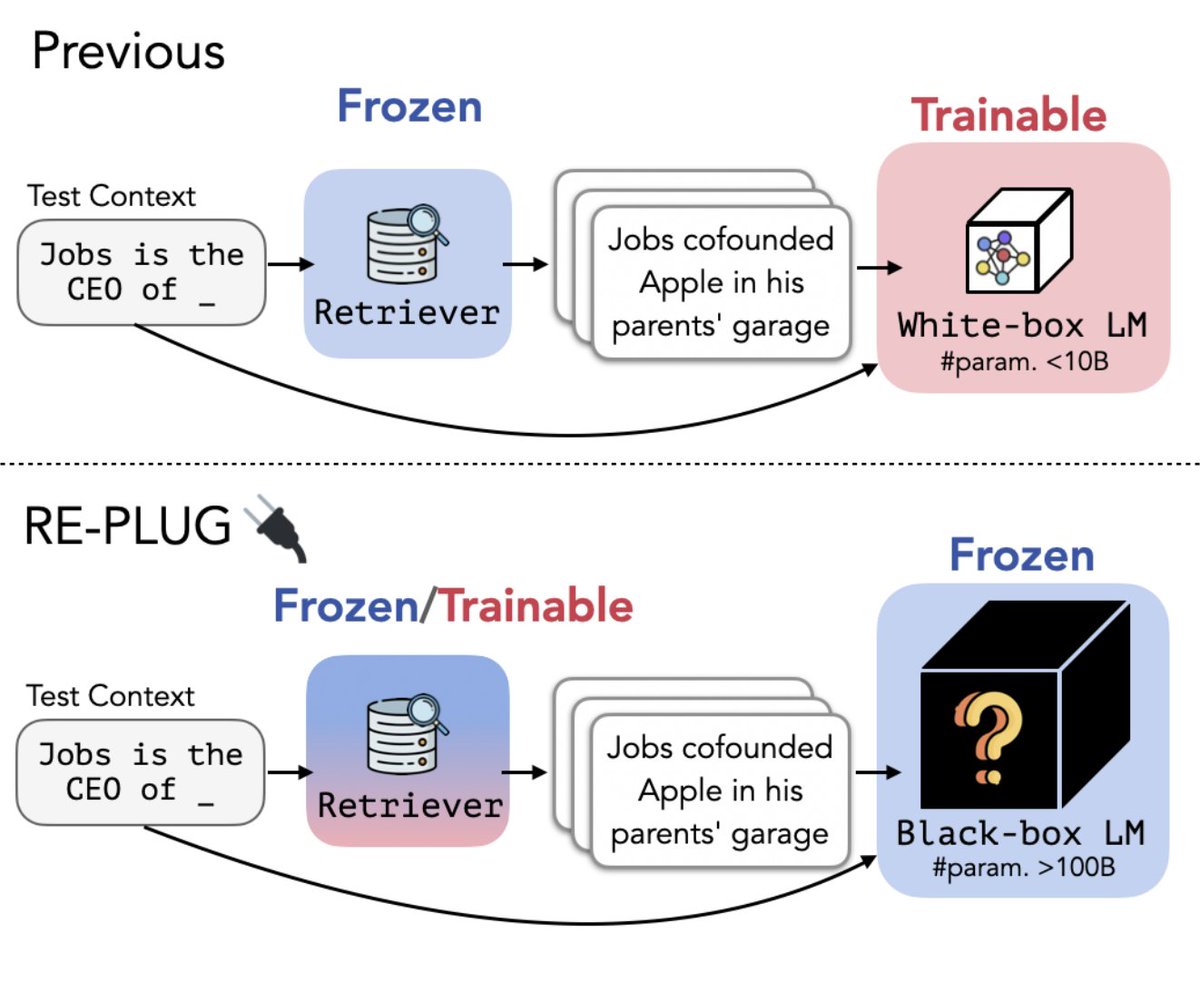

Happy to share REPLUG🔌 is accepted to #NAACL2024

We introduce a retrieval-augmented LM framework that combines a frozen LM with a frozen/tunable retriever. Improving GPT-3 in language modeling & downstream tasks by prepending retrieved docs to LM inputs.

📄:…

📢 Excited to share that our paper has been accepted to #NAACL2024 main conference! 🌟 Huge shoutout to amazing co-authors Oreva Ahia, tsvetshop, Antonis Anastasopoulos @EACL!

⛱️ See you in Mexico City! 🇲🇽 #NLProc

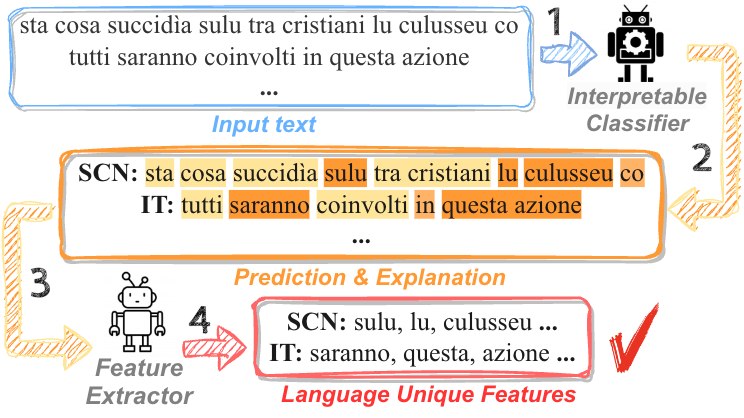

✨ Can we use interpretability methods to extract linguistic features that characterize dialects❓

🎉 New preprint: arxiv.org/abs/2402.17914 (Roy Xie, Oreva Ahia, tsvetshop, Antonis Anastasopoulos @EACL)

👉Code & Data: github.com/ruoyuxie/inter…

🧵(1/6)

📢 We are coming to Senegal 🇸🇳! 📢

We are excited to announce that the Deep Learning Indaba 2024 will be held in Dakar, Senegal at Université Amadou Mahtar MBOW from the 1st to the 7th of September.

Applications launch 🚀 is just around the corner! Don't miss it 🔔

#DLI2024

#Indaba2024

Happy to share that FormatSpread has been accepted to #ICLR2024 🎉

Extremely grateful to my advisors Yejin Choi and tsvetshop (as always!), and to Alane Suhr / suhr @ sigmoid . social, who was the best collaborator I could have asked for during this project!

See you all in Vienna 😀