Zico Kolter

@zicokolter

Associate professor at Carnegie Mellon, VP and Chief Scientist at Bosch Center for AI. Researching (deep) machine learning, robustness, and implicit layers.

ID:841499391508779008

http://zicokolter.com 14-03-2017 04:01:04

524 Tweets

14.9K Followers

499 Following

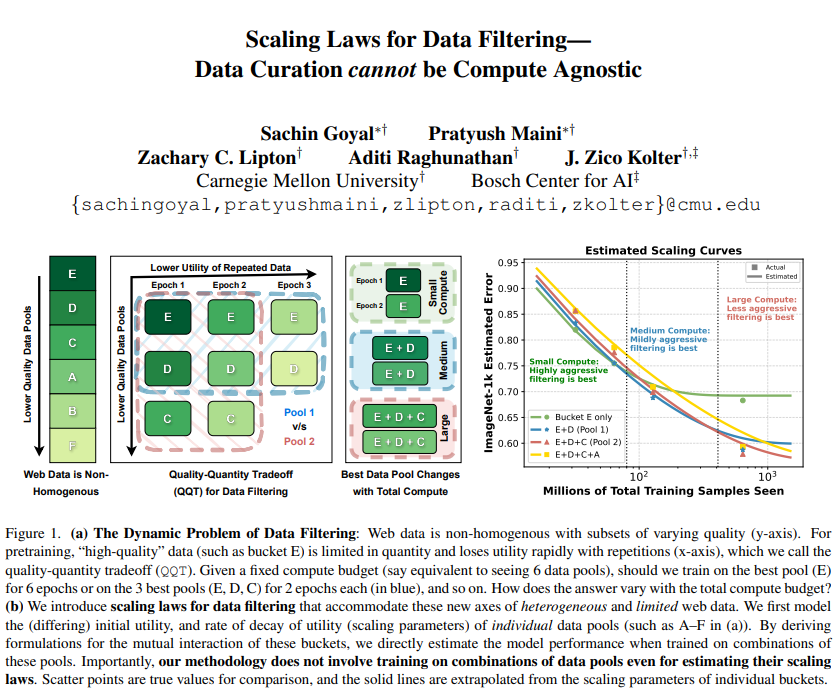

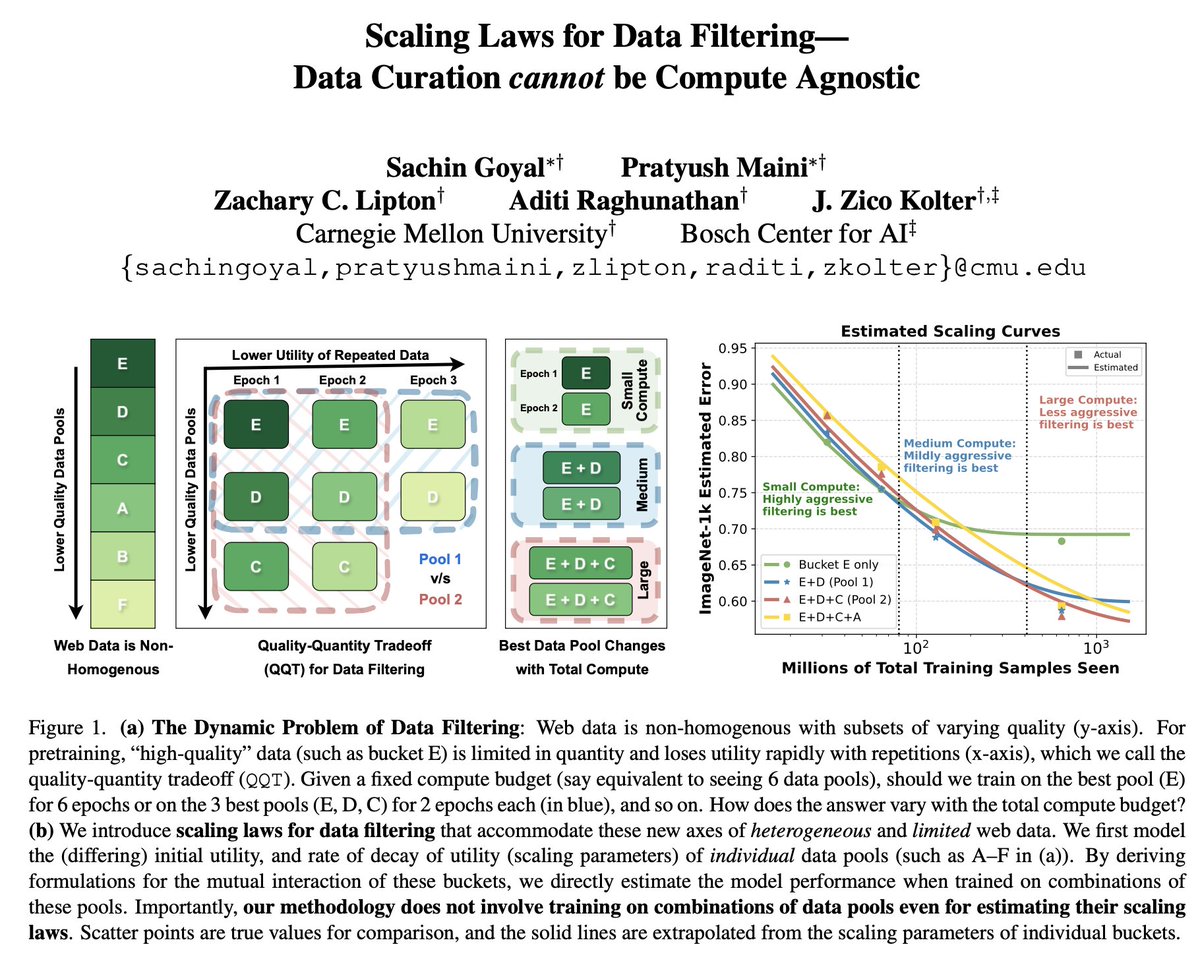

How do you balance repeated training on high-quality data against adding more low-quality data to the mix? And how much should you train on each type? Pratyush Maini and Sachin Goyal provide scaling laws for exactly these settings. Really excited about the work!

1/ 🥁Scaling Laws for Data Filtering 🥁

TL;DR: Data curation *cannot* be compute-agnostic!

In our #CVPR2024 paper, we develop the first scaling laws for heterogeneous & limited web data.

w/ Sachin Goyal, Zachary Lipton, Aditi Raghunathan, Zico Kolter

📝:arxiv.org/abs/2404.07177

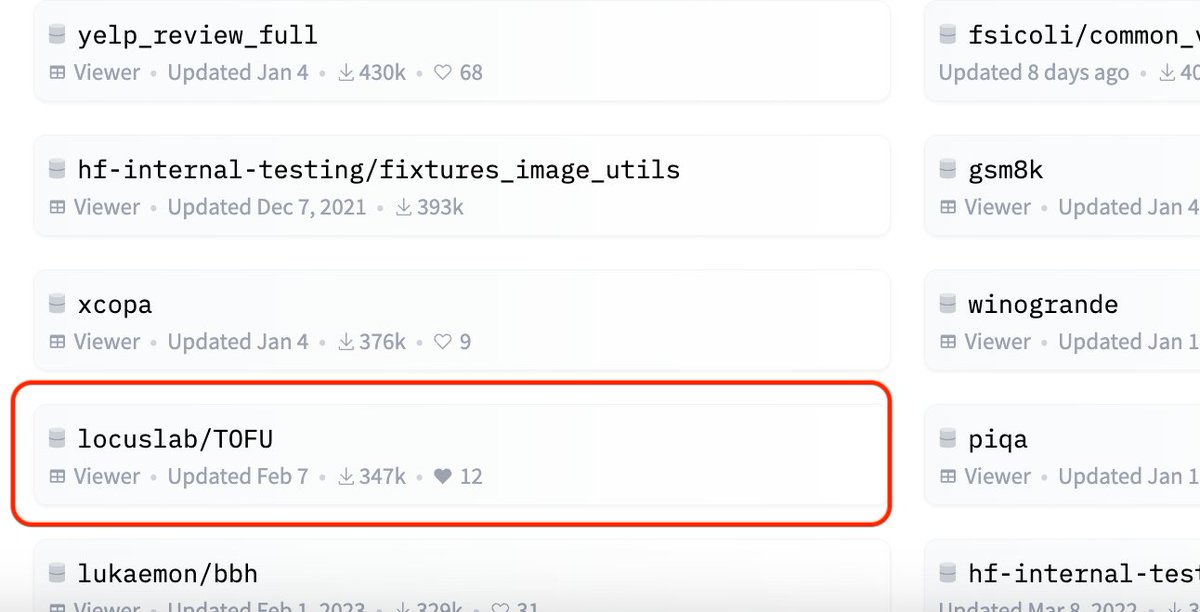

🤯 The TOFU dataset (locuslab.github.io/tofu) had 300k+ downloads last month and is among the Top 20 most downloaded datasets on Hugging Face📈. This is crazy given how small the LLM unlearning community is compared to, say, LLM evals (e.g., GSM8K). Excited to see what y'all are building!

🗣️ “Next-token predictors can’t plan!” ⚔️ “False! Every distribution is expressible as a product of next-token probabilities!” 🗣️

In work w/ Gregor Bachmann, we carefully flesh out this emerging, fragmented debate & articulate a key new failure. 🔴 arxiv.org/abs/2403.06963
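The “product of next-token probabilities” rebuttal is the chain rule of probability: p(x_1, …, x_T) = ∏_t p(x_t | x_<t). The minimal sketch below checks this numerically, assuming a hypothetical toy joint distribution over two-token sequences (the vocabulary and probabilities are made up for illustration). It shows only that any distribution *can* be factorized this way. It does not by itself settle whether a model trained with next-token prediction learns those conditionals, which is the question the debate above is about.

```python
from itertools import product

# Hypothetical toy joint distribution over length-2 sequences (illustrative numbers only).
VOCAB = ["a", "b"]
JOINT = {
    ("a", "a"): 0.1,
    ("a", "b"): 0.3,
    ("b", "a"): 0.4,
    ("b", "b"): 0.2,
}


def prefix_prob(prefix):
    """Marginal probability that a sequence starts with `prefix`."""
    return sum(p for seq, p in JOINT.items() if seq[: len(prefix)] == prefix)


def next_token_prob(prefix, token):
    """Conditional p(token | prefix) = p(prefix + token) / p(prefix)."""
    return prefix_prob(prefix + (token,)) / prefix_prob(prefix)


def chain_rule_prob(seq):
    """Multiply the next-token conditionals p(x_t | x_<t) along the sequence."""
    prob = 1.0
    for t, token in enumerate(seq):
        prob *= next_token_prob(tuple(seq[:t]), token)
    return prob


# The factorized probability matches the joint for every sequence.
for seq in product(VOCAB, repeat=2):
    print(seq, JOINT[seq], round(chain_rule_prob(seq), 10))
```

The same construction extends to any sequence length; it only requires that each prefix has nonzero probability, so the conditionals are well defined.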

Excited to share our new paper where we study the intriguing phenomenon of massive activations in LLMs.

I hope our findings can offer a fresh perspective into understanding the internal representations of these powerful models.

Work with Xinlei Chen, Zico Kolter, Zhuang Liu.

We are excited to announce this year’s keynote speakers for #MLSys2024: Jeff Dean, Zico Kolter, and Yejin Choi! MLSys this year will be held in Santa Clara on May 13–16. More details at mlsys.org.

The ICML 2024 Ethics Chairs, Kristian Lum and Lauren Oakden-Rayner, wrote a blog post about the ethics review. It should help all ICML authors and reviewers better understand the process! medium.com/@icml2024pc/et…

I've made some substantial updates to my chatllm-vscode extension (long-form LLM chats as VSCode notebooks):

1. GPT-4 Vision + DALL-E support

2. Ollama support to use local LLMs (including LLaVA for vision)

3. Azure API support (including via SSO)

Link: marketplace.visualstudio.com/items?itemName…